guy recently linked this essay, it’s old, but i don’t think it’s significantly wrong (despite gpt evangelists). also read weizenbaum, libs, for the other side of the coin

As a REDACTED who has published in a few neuroscience journals over the years, this was one of the most annoying articles I’ve ever read. It abuses language and deliberately misrepresents (or misunderstands?) certain terms of art.

As an example,

That is all well and good if we functioned as computers do, but McBeath and his colleagues gave a simpler account: to catch the ball, the player simply needs to keep moving in a way that keeps the ball in a constant visual relationship with respect to home plate and the surrounding scenery (technically, in a ‘linear optical trajectory’). This might sound complicated, but it is actually incredibly simple, and completely free of computations, representations and algorithms.

The neuronal circuitry that accomplishes the solution to this task (i.e., controlling the muscles to catch the ball), if it’s actually doing some physical work to coordinate movement in a way that satisfies the condition given, is definitionally doing computation and information processing. Sure, there aren’t algorithms in the usual way people think about them, but the brain in question almost surely has a noisy/fuzzy representation of its vision and its own position in space if not also that of the ball they’re trying to catch.

For another example,

no image of the dollar bill has in any sense been ‘stored’ in Jinny’s brain

Maybe there’s some neat philosophy behind the seemingly strategic ignorance of precisely what certain terms of art mean, but I can’t see past the obvious failure to articulate what the scientific theories in question nominally purport well enough to be able to access it.

help?

The deeper we get in to it the more it just reads as old man yells at cloud and people who want consciousness to be special and interesting being mad that everyone is ignoring them.

Yeah, this is just as insane as the people who think GPT is conscious. I’ve been trying to give a nuanced take down thread (also an academic, with a background in philosophy of science rather than the science itself). I think this resonates with people here because they’re so sick of the California Ideology narrative that we are nothing but digital computers, and that if we throw enough money and processing power at something like GPT, we’ll have built a person.

As someone who also works in the neuroscience field and is somewhat sympathetic to the Gibsonian perspective that Chemero (mentioned in the essay) subscribes to, being able to decode cortical activity doesn’t necessarily mean that the activity serves as a representation in the brain. Firstly, the decoder must be trained and secondly, there is a thing called representational drift. If you haven’t, I highly recommend reading Romain Brette’s paper “Is coding a relevant metaphor for the brain?”

He asks a crucial question: who/what is this representation for? It certainly is a representation for the neuroscientist, since they are the one who presented the stimuli and are then recording the spiking activity immediately after, but that doesn’t imply that it is a representation for the brain. Does it make sense for the brain to encode the outside world, into its own activity (spikes), then to decode it into its own activity again? Are we to assume that another part of this brain then reads this activity to translate into the outside world? This is a form of dualism.

being able to decode cortical activity doesn’t necessarily mean that the activity serves as a representation in the brain

I’m sorry: I don’t mean to be an ass, but this seems nonsensical to me. Definitionally, being able to decode some neuronal signals means that those signals carry information about the variable they encode. Thus, if those vectors of simultaneous spike trains are received by any other part of the body in question, then the representation has been communicated.

Firstly, the decoder must be trained and secondly, there is a thing called representational drift.

Why does a decoder needing to be trained for experimental work that reverse engineers neural codes imply that neural correlates of some real world stimulus are not representing that stimulus?

I have a similar issue seeing how representational drift invalidates that idea as well, especially since the circuits receiving the signals in question are plastic and dynamically adapt their responses to changes in their inputs as well.

I started reading Brette’s paper that you recommended, and I’m finding the same problems with Romain’s idea salad. He says things like, "Climate scientists, for example, rarely ask how rain encodes atmospheric pressure."

and while I think that’s not exactly the terminology they use, in the sense that they might model rain = couplingFunction(atmospheric pressure) + noise, they’re in fact mathematically asking that very question!
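A toy sketch of that point, assuming a made-up linear couplingFunction and Gaussian noise (the function, the numbers and the units are all invented here for illustration, not taken from Brette’s paper or any real climate model). If rain is generated as couplingFunction(pressure) + noise, then rain carries recoverable information about pressure, which shows up as a strong correlation that vanishes once the pairing is shuffled:

```python
import random


def coupling_function(pressure_hpa):
    # Hypothetical linear coupling: lower pressure, more rain (arbitrary units).
    return 110.0 - 0.1 * pressure_hpa


def simulate(n=2000, noise_sd=1.0, seed=0):
    """Draw pressures and generate rain = couplingFunction(pressure) + noise."""
    rng = random.Random(seed)
    pressure = [rng.uniform(980.0, 1040.0) for _ in range(n)]
    rain = [coupling_function(p) + rng.gauss(0.0, noise_sd) for p in pressure]
    return pressure, rain


def pearson_r(xs, ys):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


pressure, rain = simulate()

# Control: break the pairing between pressure and rain.
shuffled = rain[:]
random.Random(1).shuffle(shuffled)

print("rain vs pressure:", round(pearson_r(pressure, rain), 2))
print("shuffled control:", round(pearson_r(pressure, shuffled), 2))
```

Whether you call that relationship "encoding" or a "correlate" is exactly what the thread is arguing about; the sketch only shows that the modelling question is the same either way.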

Am I nit-picking or is this not an example of Brette doing the same deliberate misunderstanding of the communications metaphor as the article in the original post?

Does it make sense for the brain to encode the outside world, into its own activity (spikes), then to decode it into its own activity again?

It might?, but the question seems computationally silly. I would expect efferent circuitry receiving signals encoded as vectors of simultaneous spikes would not do extra work to try to re-map the lossy signal they’re receiving into the original stimulus space. Perhaps they’d do some other transformations on it to integrate it with other information, but why would circuitry that was grown by STDP undo the effort of the earlier populations of neurons involved in the initial compression?

sorry again if my stem education is preventing me from seeing meaning through a forest of mixed and imprecisely applied metaphors

I’m going to go read Brette’s responses to commentary on the paper you linked and see if I’m just being a thickheaded stemlord

That’s fine, I don’t think you’re being an ass at all. Brette is saying that just because there is a correspondence between the measured spike signals and the presented stimuli, that does not qualify the measured signals to be a representation. In order for it to be a representation, it also needs a feature of abstraction. The relation between an image and neural firing depends on auditory context, visual context, behavioural context, it changes over time, and imperceptible pixel changes to the image also substantially alter neural firing. According to Brette, there is little left of the concept of neural representation once you take into account all of this and you’re better off calling it a neural correlate.

hmm thank you for articulating that!

Just over a year ago, on a visit to one of the world’s most prestigious research institutes, I challenged researchers there to account for intelligent human behaviour without reference to any aspect of the IP metaphor. They couldn’t do it, and when I politely raised the issue in subsequent email communications, they still had nothing to offer months later. They saw the problem. They didn’t dismiss the challenge as trivial. But they couldn’t offer an alternative. In other words, the IP metaphor is ‘sticky’. It encumbers our thinking with language and ideas that are so powerful we have trouble thinking around them.

I mean, protip, if you ask people to discard all of their language for discussing a subject they’re not going to be able to discuss the subject. This isn’t a gotcha. We interact with the world through symbols and metaphors. Computers are the symbolic language with which we discuss the mostly incomprehensible function of about a hundred billion weird little cells squirting chemicals and electricity around.

Yeah I’m not going to finish this but it just sounds like god of the gaps contrarianism. We have a symbolic language for discussing a complex phenomenon that doesn’t really reflect the symbols we use to discuss it. We don’t know how memory encoding and retrieval works. The author doesn’t either, and it really just sounds like they’re peeved that other people don’t treat memory as an irreducibly complex mystery never to be solved.

Something they could have talked about - Our memories change over time because, afaik, the process of recalling a memory uses the same mechanics as the process of creating a memory. What I’m told is we’re experiencing the event we’re remembering again, and because we’re basically doing a live performance in our head the act of remembering can also change the memory. It’s not a hard drive, there’s no ones and zeroes in there. It’s a complex, messy biological process that arose under the influence of evolution, aka totally bonkers bs. But there is information in there. People remember strings of numbers, names, locations, literal computer code. We don’t know for sure how it’s encoded, retrieved, manipulated, “loaded in to ram”, but we know it’s there. As mentioned, people with some training can recall enormous amounts of information verbatim. There are, contrary to the dollar experiment, people who can reproduce images with high detail and accuracy after one brief viewing. There’s all kinds of weird eidetic memory and outliers.

From what I understand most people are moving towards a system model - Memories aren’t encoded in a cell, or as a pattern of chemicals, it’s a complex process that involves a whole lot of shit and can’t be discretely observed by looking at an isolated piece of the brain. You need to know what the system is doing. To deliberately poke fun at the author - It’s like trying to read the binary of a fragmented hard drive, it’s not going to make any sense. You’ve got to load the index that knows where all the pieces of the files are stored on the disk in to memory so it can assemble them in to something useful. Your file isn’t “stored” anywhere on the disk. Binary is stored on the disk. A program is needed to take that binary and turn it in to readable information. “We’re never going to be able to upload a brain” is just whiny contrarian nonsense, it’s god of the gaps. We don’t know how it works now so we’ll never know how it works. So we need to produce a 1:1 scan of the whole body and all its processes? So what, maybe we’ll have tech to do that some day. Maybe we’ll, you know, skip the whole “upload” thing and figure out how to hook a brain in to a computer interface directly, or integrate the meat with the metal. It’s so unimaginative to just throw your hands up and say “it’s too complicated! digital intelligence is impossible!” Like come on, we know you can run an intelligence on a few pounds of electrified grease. That’s a known, unquestionable thing. The machine exists, it’s sitting in each of our skulls, and every year we’re getting better and better at understanding and controlling it. There’s no reason to categorically reject the idea that we’ll some day be able to copy it, or alter it in such a way that it can be copied. It doesn’t violate any laws of physics, it doesn’t require goofy exists-only-on-paper exotic particles. It’s just electrified meat.
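To make the fragmented-drive jab concrete, here’s a minimal sketch, assuming a toy “disk” that is just a dict of numbered blocks and a toy index mapping a file name to its block order (`store`, `read_file`, `raw_scan` and the 4-byte block size are all made up for this example, not how any real filesystem is laid out). Reading the blocks in physical order gives scrambled bytes; reading them through the index gives the file back:

```python
def store(disk, index, name, data, block_order, block_size=4):
    """Split data into chunks and scatter them over the given block numbers."""
    chunks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    if len(block_order) < len(chunks):
        raise ValueError("not enough free blocks")
    used = block_order[:len(chunks)]
    for block_no, chunk in zip(used, chunks):
        disk[block_no] = chunk
    index[name] = used  # the index remembers the logical order


def read_file(disk, index, name):
    """Reassemble a file by following the index."""
    return b"".join(disk[block_no] for block_no in index[name])


def raw_scan(disk):
    """Read blocks in physical order, ignoring the index."""
    return b"".join(disk[block_no] for block_no in sorted(disk))


disk, index = {}, {}
store(disk, index, "note.txt", b"one dollar bill!", block_order=[7, 2, 9, 0])

print(raw_scan(disk))                      # scrambled: pieces are out of order
print(read_file(disk, index, "note.txt"))  # the original bytes
```

The information is all on the “disk” in both cases; what differs is whether you have the process that knows how to put it together.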

Also, if bozo could please explain how trained oral historians and poets can recall thousands of stanzas of poetry verbatim with few or no errors I’d love to hear that, because it raises some questions about the dollar bill “experiment”.

Moreover, we absolutely do have memory. The concept existed before computers and we named the computer’s process after that. We have memories, and computers do something that we easily liken to having memories. Computer memory is the metaphor here

Yeah, it’s a really odd thing to harp about. Guy’s a psychologist, though, and was doing most of his notable work in the 70s and 80s which was closer to the neolithic than it is to modernity. I think this is mostly just “old man yells at clouds” because he’s mad that neuroscience lapped psychology a long time ago and can actually produce results.

Ok, that was great and all, but could you give this short essay again without mentioning any of the brain’s processes or using vowels? If you can’t, it proves your whole premise is flawed somehow.

Right? This is what happens when you let stem people loose without a humanities person to ride herd on them. Any anthropologist would tell you how silly this is.

You don’t remember the text though, and stanzas recounting can sometimes have word substitutions which fit rhythmically.

If I asked you what is 300th word of the poem, you cannot do it. Computer can. If I start with two words of the verse, you could immediately continue. It’s sequence of words with meaning, outside of couple thousands of competitive pi-memorizers, people cannot remember gibberish, try to remember hash number of something for a day. It’s significantly less memory, either as word vector or symbol vector than a haiku.

Re: language, and how far along did the mechanical analogy take us? Until equations or language corresponding to reality are used, you are fumbling about fitting round spheres in spiral holes. Sure, you can use the Ptolemaic system and add new round components, or you can realize orbits are ellipses

History of science should actually horrify science bros: 300 years ago scientists firmly believed phlogiston was the source of burning, 100 years ago aether was all around us, and our brains were ticking boxes of gears, 60 years ago neutrinos didn’t have mass, while dna was happily deterministically making humans. Whatever we believe now is scientific truth, by historic precedent, likely isn’t (correspondence between model and reality). Theories are getting better all the time (increasing correspondence), but I don’t know of a perfect scientific theory (maybe chemistry is sorta solved with fiddling around the edges).

Why would that horrify us? That’s how science works. We observe the world, create hypotheses based on those observations, develop experiments to test those hypotheses, and build theories based on whether experimentation confirmed our hypotheses. Phlogiston wasn’t real, but the theory conformed to the observations made with the tools available at the time. We could have this theory of phlogiston, and we could experiment to determine the validity of that theory. When new tools allowed us to observe novel phenomena the phlogiston theory was discarded. Science is a philosophy of knowledge; the world operates on consistent rules and these rules can be determined by observation and experiment. Science will never be complete. Science makes no definitive statements. We build theoretical models of the world, and we use those models until we find that they don’t agree with our observations.

*because confidently relying on the model (in this case informational) prediction like ooh, we could do brain no problem in computer space, you are not exactly making a good scientific prediction. Good scientific prediction is that model is likely garbage, until proven otherwise, and thus shouldn’t be end all be all.

But then if you take the information processing model, what does it give you, exactly, in understanding of the brain? The author’s contention is that it is a hot garbage framework, it doesn’t fit with how the brain works, your brain is not a tiny hdd with ram and cpu, and as long as you think that it is, you will be searching for mirages.

Yes neural networks are much closer (because they are fucking designed to be), and yet even they have to be force fed random noise to introduce fuzziness in responses, or they’ll do the same thing every time. You reboot and reload a neural net, it will do the same thing every time. But the brain is not just connections of axons, it’s also the extremely complicated state of the neuron itself with point mutations, dna repairs, expression levels, random rna garbage flowing about, lipid rafts at synapses, vesicles missing cause microtubules decided to chill for a day, the hormonal state of the blood, the input from the sympathetic neural system etc
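A rough sketch of the “same thing every time unless you feed it noise” part, assuming a toy stand-in for a trained net: a fixed table of output scores (`LOGITS`, invented for this example) rather than any real model or library. Picking the top score is fully repeatable, sampling with a seeded random generator is repeatable for the same seed, and the variety only comes from the injected randomness:

```python
import math
import random

# Hypothetical fixed scores standing in for a trained network's output.
LOGITS = {"ball": 2.0, "bill": 1.5, "bell": 1.0}


def softmax(logits, temperature=1.0):
    """Turn scores into probabilities; higher temperature flattens them."""
    scaled = {k: v / temperature for k, v in logits.items()}
    top = max(scaled.values())
    exps = {k: math.exp(v - top) for k, v in scaled.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def greedy(logits):
    """No randomness: always return the highest-scoring answer."""
    return max(logits, key=logits.get)


def sample(logits, rng, temperature=1.0):
    """Draw one answer at random, weighted by the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


def run(seed, n=10):
    """Simulate a fresh 'reboot': new generator, same weights, same seed."""
    rng = random.Random(seed)
    return [sample(LOGITS, rng) for _ in range(n)]


print([greedy(LOGITS) for _ in range(3)])  # no noise: identical on every call
print(run(seed=42) == run(seed=42))        # same seed after a reload: identical
print(len(set(run(seed=7, n=200))) > 1)    # with injected noise the answers vary
```

None of this touches the rest of the comment’s point about cell state, hormones and so on; the sketch has no analogue for any of that.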

We haven’t even fully simulated one single cell yet.

Computers know the 300th word because they store their stuff in arrays, which do not exist in brains. They could also store it in linked lists, like a brain does, but that’s inefficient for the silicon memory layout.

Also, brains can know the 300th word. Just count. Guess what a computer does when it has to get the 300th element of a linked list: it counts to 300.
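For anyone who hasn’t written one, a minimal sketch of the difference, using a placeholder 500-word “poem” (`word1` … `word500`, made up for the example). The array lookup jumps straight to slot 300; the linked list has to follow links one at a time, which is the counting being described:

```python
class Node:
    def __init__(self, word):
        self.word = word
        self.next = None


def build_linked_list(words):
    """Chain the words together; return the head node (or None if empty)."""
    head = tail = None
    for w in words:
        node = Node(w)
        if head is None:
            head = tail = node
        else:
            tail.next = node
            tail = node
    return head


def nth_word_linked(head, n):
    """Return (word, hops) for the n-th word (1-based) by walking the chain."""
    if n < 1:
        raise IndexError("n must be at least 1")
    node, hops = head, 0
    while node is not None and hops < n - 1:
        node = node.next
        hops += 1
    if node is None:
        raise IndexError("poem is shorter than n words")
    return node.word, hops


poem = [f"word{i}" for i in range(1, 501)]  # stand-in for a memorised poem
head = build_linked_list(poem)

print(poem[299])                   # array: jump straight to the 300th slot
print(nth_word_linked(head, 300))  # linked list: follow 299 links first
```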

And computers can count, that’s all they can do as turing machines, we can’t or not that well, feels there is a mismatch here in mediums🤔. If I took 10 people knowing same poem, what are the odds I’ll get same word from all of them?

Can that linked list in the brain be accessed in all contexts then? Can you sing a hip hop song while a death metal backing track is playing?

Moreover linked list implies middle parts are not accessible before going through preceding elements, do you honestly think that’s a good analogue for human memory?

Humans have fingers so they can count, so the odds 10 people get the same word should be 100%.

I can plug my ears.

I could implement a linked list connected to a hash map that can be accessed from the middle.
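Something like this, as a minimal sketch: a linked list of words plus a hash map keyed on two-word cues, so you can pick up from the middle without walking from the start. `CuedPoem` and `continue_from` are names made up for the example, and it is only meant to show the data structure, not to claim this is what a brain does:

```python
class Node:
    def __init__(self, word):
        self.word = word
        self.next = None


class CuedPoem:
    """Linked list of words plus a hash map from two-word cues to nodes."""

    def __init__(self, words):
        self.head = None
        self.cues = {}  # (word, next_word) -> node holding `word`
        prev = None
        for w in words:
            node = Node(w)
            if prev is None:
                self.head = node
            else:
                prev.next = node
                # Keep only the first occurrence of a repeated cue.
                self.cues.setdefault((prev.word, w), prev)
            prev = node

    def continue_from(self, first, second, count):
        """Return up to `count` words after the cue, without walking from the head."""
        node = self.cues.get((first, second))
        if node is None:
            return None  # cue does not appear in the poem
        node = node.next.next  # skip past the two cue words
        out = []
        while node is not None and len(out) < count:
            out.append(node.word)
            node = node.next
        return out


poem = CuedPoem("the quick brown fox jumps over the lazy dog".split())
print(poem.continue_from("jumps", "over", 3))
```

The hash map lookup is one step regardless of where the cue sits in the poem; after that it is an ordinary linked-list walk.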

Lol @100 percent.

So which one brain does? Linked list with hash maps then? Final simple computer analogy? Maybe indexed binary tree? Or maybe it’s not that?

When I want to recall a song, I have to remember one part, and then I can play it in my head. However, I can’t just skip to the end.

Linked list

So if second verse plays you can’t sing along until your brain parses through previous verses? I find it rather hard to believe

Do you think humans are so dumb they can’t count to 300?

you can try to find 200th word on physical book page, I suspect on first tries you’ll get different answers. It’s not dumbness, with poem it’s rather complicated counting and reciting (and gesturing, if you use hands), and direct count while you are bored (as in with book), might make mind either skip words, or cycle numbers. We aren’t built for counting, fiddling with complicated math is simpler than doing direct and boring count

If I asked you what is 300th word of the poem, you cannot do it. Computer can

I’m sorry, but this is a silly argument. Somebody might very well be able to tell you what the 300th word of a poem is, while a computer that stored that poem as a .bmp file wouldn’t be able to (without tools other than just basic stuff that allows it to show you .bmp images). In different contexts we remember different things about stuff.

Generally you can’t though. Of course there are people who remember in different ways, or who can remember pi number to untold digits. Doesn’t mean there are tiny bytes-like engravings in their brain, or that they could remember it perfectly some time from now. Computer can tell what is 300 pixel of that image, while you don’t even have pixels, or fixed visual memory shape. Maybe it’s wide shot of nature, or maybe it’s a reflection of the light in the eyes of your loved one

People don’t think that brains are silicon chips running code through logic gates. At least, the vast majority of people don’t.

The point we’re making here is that both computers and human minds follow a process to arrive at a given conclusion, or count to 300, or determine where the 300th pixel is in a computer. A computer doesn’t do that magically. There’s a program that runs that counts to 300. A human would have to dig out a magnifying glass and count to three hundred. The details are different, but both are counting to 300.

because that’s a task for computer, my second example: giving you two words, it would be slower for computer than arriving at 300 th word, while for you it would be significantly faster than counting.

fundamentally a question is brain a turing machine? I rather think not, but it could be simulated as such with some untold complexity.

Firstly, I want to say it’s cool you’re positively engaging and stimulating a lot of conversation around this.

As far as turing machines go - It’s only a concept that’s meant to show a fundamental “level” of computing (“turing completeness”), what a computing device can or cannot achieve. As you agree a turing machine could ‘simulate’ a brain (and we know brains can simulate a turing machine - we invented them!), then conceptually, yes, the brain is computationally equivalent, it is ‘turing complete’, albeit with some randomness thrown in.

some randomness thrown in.

I remain extremely mad at the Quantum jerks for demonstrating that the universe is almost certainly not deterministic. I refuse to be cool about it.

We can simulate a water molecule, does it make a turing machine then? Is single protein? A whole cell? 1000 cells in some invertebrate?

Simulation doesn’t work backwards, it’s not an implied equivalency of turing completeness for both directions. If brain is a turing machine we can map one to one its whole function to any existing turing machine, not simulate it with some degree of accuracy.

because that’s a task for computer, my second example: giving you two words, it would be slower for computer than arriving at 300 th word, while for you it would be significantly faster than counting

If your thesis is that human brains do not work perfectly the same way, and not that the analogy with computers in general is wrong, then sure, but nobody disagrees with that thesis, then. I don’t think that any adult alive has proposed that a human brain is just a conventional binary computer.

However, this argument fails when it comes to the thesis of analogy with computers in general. Not sure how it is even supposed to be addressing it.

fundamentally a question is brain a turing machine? I rather think not

Well, firstly, a Turing machine is an idea, and not an actual device or a type of device.

Secondly, if your thesis is that Turing machine does not model how brains work, then what’s your argument here?

Of course I can’t prove that brain is not a turing machine, I would be world famous if I could. Computers are turing machines yes? They cannot do non-Turing machines operations (decisions or whatever that’s called)

What comparing computer with brain gives to science, I’m asking again for third time in this thread. What insight it provides, aside from mechanizing us to the world? That short term memory exists? a stone age child could tell you that. That information goes from the eyes as bits like a camera? That’s already significantly wrong. That you recall like a photograph read out from your computer? Also very likely wrong

Again, though, this simply works to reinforce the computer analogy, considering stuff like file formats. You also have to concede that a conventional computer that stores the poem as a .bmp file isn’t going to tell you what the 300th word in it is (again, without tools like text recognition), just like a human is generally not going to be able to (in the sort of timespan that you have in mind that is - it’s perfectly possible and probable for a person who has memorised the poem to tell what the 300th word is, it would just take a bit of time).

Again, we can also remember different things about different objects, just like conventional computers can store files of different formats.

A software engineer might see something like ‘O(x)’ and immediately think ‘oh, this is likely a fast algorithm’, remembering the connection between time complexity of algorithms with big-O notation. Meanwhile, what immediately comes to mind for me is ‘what filter base are we talking about?’, as I am going to remember that classes of finally relatively bounded functions differ between filter bases. Or, a better example, we can have two people that have played, say, Starcraft. One of them might tell you that some building costs this amount of resources, while the other one won’t be able to tell you that, but will be able to tell you that they usually get to afford it by such-and-such point in time.

Also, if you are going to point out that a computer can’t tell if a particular image is of a ‘wide shot of nature’ or of a ‘reflection of the light in the eyes of one’s loved one’, you will have to contend with the fact that image recognition software exists and it can, in fact, be trained to tell such things in a lot of cases, while many people are going to have issues with telling you relevant information. In particular, a person with severe face blindness might not be able to tell you what person a particular image is supposed to depict.

I’m talking about visual memory what you see when you recall it, not about image recognition. Computers could recognize faces 30 years ago.

I’m suggesting that it’s not linked lists, or images or sounds or bytes in some way, but rather closer to persistent hallucinations of self referential neural networks upon specified input (whether cognitive or otherwise), which also mutate in place by themselves and by recall but yet not completely wildly, and it’s rather far away picture from memory as in engraving on stone tablet/leather/magnetic tape/optical storage/logical gates in ram. Memory is like a growing tree or an old house is not exactly most helpful metaphor, but probably closer to what it does than a linked list

I’m talking about visual memory what you see when you recall it, not about image recognition

What is ‘visual memory’, then?

Also, on what grounds are you going to claim that a computer can’t have ‘visual memory’?

And why is image recognition suddenly irrelevant here?

So far, this seems rather arbitrary.

Also, people usually do not keep a memory of an image of a poem if they are memorising it, as far as I can tell, so this pivot to ‘visual memory’ seems irrelevant to what you were saying previously.

I’m suggesting that it’s not linked lists, or images or sounds or bytes in some way, but rather closer to persistent hallucinations of self referential neural networks upon specified input

So, what’s the difference?

which also mutate in place by themselves and by recall but yet not completely wildly

And? I can just as well point out the fact that hard drives and SSDs do suffer from memory corruption with time, and there is also the fact that a computer can be designed in a way that its memory gets changed every time it is accessed. Now what?

Memory is like a growing tree or an old house is not exactly most helpful metaphor, but probably closer to what it does than a linked list

Things that are literally called ‘biological computers’ are a thing. While not all of them feature ability to ‘grow’ memory, it should be pretty clear that computers have this capability.

What is visual memory indeed in informational analogy, do tell me? Does it have consistent or persistent size, shape or anything resembling bmp file?

The difference is neural networks are bolted on structures, not information.

outside of couple of weirdos, people cannot remember gibberish, try to remember hash number of something for a day

Don’t appreciate the ableist language here just because neurodivergence is inconvenient to your argument. I can fairly easily memorize my credit card number.

I can as well, hash numbers are much worse due to 16 number system.

I was mainly pointing out it’s not typical brain activity to remember info which we don’t perceive as memorable, despite its information contents. It’s not a poke at nd folks, why would people remembering 10000 digits of pi be nd? but i’ll change it, you are right

the point is that humans have subjective experiences in addition to, or in place of, whatever processes we could describe as information processing. since we aren’t sure what is responsible for subjective experiences in humans, (we understand increasingly more of the physical correlates of conscious experience, but have no causal theories that can explain how the physical brain-states produce subjectivity) it would be presumptuous of us to assume we can simulate it in a digital computer. It may be possible with some future technology, field of science, or paradigm of thinking in mathematics or philosophy or something, but to assume we can just do it now with only trivial modifications or additions to our theories is like humans of the past trying to tackle disease using miasma theory - we simply don’t understand the subject of study enough to create accurate models of it. How exactly do you bridge the gap from objective physical phenomena to subjective experiential phenomena, even in theory? How much, or what kind, of information processing results in something like subjective experiential awareness? If ‘consciousness is illusory’, then what is the exact nature of the illusion, what is the illusion for the benefit of (i.e. what is the illusion concealing, and what is being kept ignorant by this illusion?) and how can we explain it in terms of physics and information processing?

it is just as presumptuous to assume that digital computers CAN simulate human consciousness without losing anything important, as it is to assume that they cannot.

Also, if bozo could please explain how trained oral historians and poets can recall thousands of stanzas of poetry verbatim with few or no errors I’d love to hear that, because it raises some questions about the dollar bill “experiment”.

Through learned, embodied habit. They know it in their bones and muscles. It isn’t the mechanical reproduction of a computer or machine.

Imo I don’t think we could ever “upload a brain” and even if we did, it would be a horrific subjective experience. So much of our sense of self and of consciousness is learned and developed over time through being in the world as a body. Losing a limb has a significant impact on someone’s consciousness (phantom limbs can hurt); imagine losing your entire body. This thought experiment is still under the assumption that the brain alone is the entire seat of conscious experience, which is doubtful as this just falls into a mind/body dualism under the idea that the brain is a CPU which could be simply plugged into something else.

Could there be an emergent conscious AI at some point? Perhaps, but as far as we can tell it may very well require a kind of childhood and slow development of embodied experience in a similar capacity to how any known lifeform becomes conscious. Not a human brain shoved into a vat.

This essay is ridiculous, it’s arguing against a concept that nobody with the minutest understanding or interest in the brain has. He’s arguing that because you cannot go find the picture of a dollar bill in any single neuron, that means the brain is not storing the “representation” of a dollar bill.

I am the first to argue the brain is more than just a plain neural network, it’s highly diversified and works in ways beyond our understanding yet, but this is just silly. The brain obviously stores the understanding of a dollar bill in the pattern and sets of neurons (like a neural network). The brain quite obviously has to store the representation of a dollar bill, and we probably will find a way to isolate this in a brain in the next 100 years. It’s just that, like a neural network, information is stored in complex multi-layered systems rather than traditional computing where a specific bit of memory is stored in a specific address.

Author is half arguing a point absolutely nobody makes, and half arguing that “human brains are super duper special and can never be represented by machinery because magic”. Which is a very tired philosophical argument. Human brains are amazing and continue to exceed our understanding, but they are just shifting information around in patterns, and that’s a simple physical process.

This whole thing is incredibly frustrating. Like this guy did draw a representation of a dollar bill. It was a shitty representation, but so is a 640x400 image of a Monet. What’s the argument being made, even? It’s just an empty gotcha. The way that image is stored and retrieved is radically different from how most actual physical computers work, but there is observably an analogous process happening. You point a camera at an object, take a picture, store it to disk, retrieve it, you get an approximation of the object as perceived by the camera. You show someone the same object, they somehow store a representation of that object somewhere in their meat, and when you ask them to draw it they’re retrieving that approximation and feeding that approximation to their hands to draw the image. I don’t get why the guy thinks these things are obviously, axiomatically incomparable.

Meh, this is basically just someone being Big Mad about the popular choice of metaphor for neurology. Like, yes, the human brain doesn’t have RAM or store bits in an array to represent numbers, but one could describe short term memory with that metaphor and be largely correct.

Biological cognition is poorly understood primarily because the medium it is expressed on is incomprehensibly complex. Mapping out the neurons in a single cubic millimeter of a human brain takes literal petabytes of storage, and that’s just a static snapshot. But ultimately it is something that occurs in the material world under the same rules as everything else, and does not have some metaphysical component that somehow makes it impossible to simulate using software in much the same way we’d model a star’s life cycle or galaxy formations, just unimaginable using current technology.

The OP is not arguing it has a metaphysical component. It’s arguing the structure of the brain is different from the structure of your PC. The metaphor bit is important because all thinking is metaphor with different levels of rigor and abstraction. A faulty metaphor forces you to think the wrong way.

I do disagree with some things: what’s a metaphor if not a model? What’s reacting to stimuli if not processing information?

The OP is not arguing it has a metaphysical component.

Yes they are. They might scream in your face that they’re not, but the argument they’re making is based not on science and observation but rather the chains of a christian culture they do not feel and cannot see.

A faulty metaphor forces you to think the wrong way.

The Sapir-Whorf hypothesis, if it’s accurate at all, does not have a strong effect.

what’s a metaphor if not a model?

To quote the dictionary; “a figure of speech in which a word or phrase is applied to an object or action to which it is not literally applicable.” Which seems to be the real problem, here; Psychologists and philosophers hear someone using a metaphor and think they must literally believe what the psychologist or philosopher believes about the symbol being used.

I think you are right. Our disagreement comes from thinking the metaphor refers to structure rather than just language. Let’s say an atomic model where the electrons are flying around a nucleus forming shells is also not literally applicable. But we think of it as a useful metaphor because it’s close enough.

The same should apply to the most sophisticated mathematical models. A useful metaphor should then be a more primitive form of this process where it illustrates a mechanism. If the mechanism is different from the mechanism in the metaphor then it should be wrong.

If the metaphor is just there to provide names, then you are of course right that it should not change anything.

Whether the metaphor of computers and brains is correct or not should also have no effect on whether we can simulate a brain in a computer. Computers can after all simulate many things that do not work like computers.

Mapping out the neurons in a single cubic millimeter of a human brain takes literal petabytes of storage, and that’s just a static snapshot

I’ve read long ago that replicating all the functions of a human brain is probably possible with computers around one order of magnitude less powerful than the brain because it’s kind of inefficient

There’s no way we can know that, currently. The brain does work in all sorts of ways we really don’t understand. Much like the history of understanding DNA, what gets written off as “random inefficiency” is almost certainly a fundamental part of how it works.

because it’s kind of inefficient

relative to what and in what sense do you mean this?

I mean for the most extreme example, it takes approximately 1 bazillion operations to solve 1+1

It doesn’t actually. Bees can be trained to do simple arithmetic and they have relatively few neurons.

I hypothesize that it takes fewer watts for a bee brain to do arithmetic than it does for my gpu to simulate an incredibly simple and highly reduced model of a biological neural network to do the same thing.

No I mean the human brain does that, and adding 1 and 1 can be done with like a few wires, or technically two rocks if you wanna be silly about it

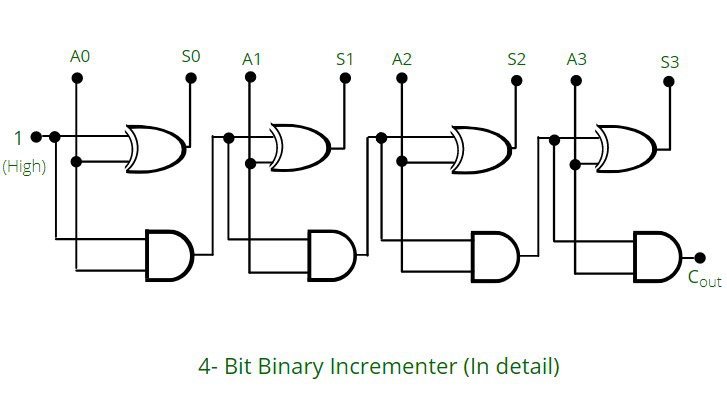

This thing adds 1 to any number from 0 to 15 and it’s tremendously less complex than a neuron, it’s like 50 pieces of metal or whatever
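For a sense of how little logic "add 1 to a number from 0 to 15" needs, here is a minimal sketch of a 4-bit incrementer built from half adders (one XOR and one AND each). Whether the pictured device is wired this way is an assumption; the function names are made up for the example.

```python
def half_adder(a, b):
    """Return (sum_bit, carry_bit) for two input bits."""
    return a ^ b, a & b


def increment_4bit(n):
    """Add 1 to a 4-bit number using a chain of four half adders."""
    if not 0 <= n <= 15:
        raise ValueError("expected a value from 0 to 15")
    bits = [(n >> i) & 1 for i in range(4)]  # least significant bit first
    carry = 1  # the constant "+1" enters as the initial carry
    out = []
    for bit in bits:
        s, carry = half_adder(bit, carry)
        out.append(s)
    out.append(carry)  # fifth output bit, so 15 + 1 gives 16 instead of wrapping
    return sum(b << i for i, b in enumerate(out))
```

That is eight gate operations in total (four XORs and four ANDs), which is the scale of hardware being compared against a neuron here.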

This thing adds 1 to any number from 0 to 15 and it’s tremendously less complex than a neuron, it’s like 50 pieces of metal or whatever

Sorry for being thick. You mean the human brain does which?

The human brain does that many operations to add 1 and 1

You can probably do it with a Pentium processor if you know how. The brain is very slow, and Pentium processors are amazingly fast. It’s just that we have no idea how.

Resident dumb guy chipping in, but are these two facts mutually exclusive? Assuming both are true, it just means you’d need a computer that’s 1e12x as powerful as our supercomputers to simulate the brain, which is itself 1e13x as powerful as a supercomputer. So we’re still not getting there anytime soon.

*With a very loose meaning of what “powerful” means seeing as the way the brain works is completely different to a computer that calculates in discrete steps.

I could describe it as a gold hunter with those sluice thingies, throwing water out and keeping gold. There, I described short term memory.

I don’t disagree it’s a material process, I just think we find the most complex analogy we have at the time and take it (as the author mentions), but then start taking the metaphor too far

Yeah but we, if “we” is people who have a basic understanding of neuroscience, aren’t taking it too far. The author is yelling at a straw man, or at lay people which is equally pointless. Neuroscientists don’t think of the mind or the brain it runs on as being a literal digital computer. They have their own completely incomprehensible jargon for discussing the brain and the mind, and if this article is taken at face value the author either doesn’t know that or is talking to someone other than people who do actual cognitive research.

I’ma be honest, I think there might be some academic infighting here. Psychology is a field with little meaningful rigor and poor explanatory power, while neuroscience is on much firmer ground and has largely upended the theories arising from Epstein’s heyday. I think he might be feeling the icy hand of mortality in his chest and is upset the world has moved past him and his ideas.

Also, the gold miner isn’t a good metaphor. In that metaphor information only goes one way and is sifted out of chaos. There’s no place in the metaphor for a process of encoding, retrieving, or modifying information. It does not resemble the action of the mind and cannot be used as a rough and ready metaphor for discussing the mind.

I work in neuroscience and I don’t agree that it is on much firmer ground than psychology. In fact, as some people in the community have noted, the neuroscience mainstream is probably still in the pre-paradigmatic stage (using Kuhn). And believe it or not, a lot of neuroscientists naively do believe that the brain is like a computer (maybe not exactly one, but very close).

Sure there is: encoding is taking sand from the river (taking noise from the world into comprehensible inputs), storage is taking the gold, modifying is throwing some bits out or taking them to the smith.

From the bottom up (and in the middle, if we take partial electro, ultrasound or magnetic stimulation) neuroscience advances are significant but rather vague. We likely know how memory works on the molecular level, but that has jack shit to do with information pipelines, but rather rigorous experiments, or in case of machine human interface more like skilled interpretation of what you see and knowing where to look for it (you can ascribe it to top down approach).

Neuroscientists likely don’t, but I think you have a rather nicer opinion than I do of tech bros, or of how far their ideas have spread among people

My opinion of tech bros is that anyone deserving the label “tech bro” is a dangerous twit who should be under the full time supervision of someone with humanities training, a gun, and orders to use it if the tech bro starts showing signs of independent thought. It’s a thoroughly pathological world view, a band of lethally competent illiterates who think they hold all human knowledge and wisdom. If this is all directed at tech bros I likely didn’t realize it because I consider trying to teach nuance to tech bros about as useful as trying to teach it to a dog and didn’t consider someone in an academic field would want to address them.

Did this motherfucker really write more than 4000 words because nobody told them “all models are wrong but some are useful”?

We really don’t know enough about the brain to make any sweeping statements about it at all beyond “it’s made of cells” or whatever.

Also, Dr. Epstein? Unfortunate.

We really do, though. Like we really, really do. Not enough to build one from scratch, but my understanding is we’re starting to be able to read images people are forming in their minds, to locate individual memories within the brain, we’re starting to get a grasp on how at least some of the sub systems of the mind function and handle sensory information. Like we are making real progress at a rapid pace.

We can’t yet really read images people are thinking of, but we have got a very vague technology that can associate very specific brainwave patterns with specific images after extensive training with that specific image on the individual. Which is still an impressive 1% of the way there.

I would love to see some studies that you believe show this. I have seen several over the last decade and come to the conclusion that most of these are bunk or just able to recognize one brain signal pattern, and that that pattern actually is indistinguishable from some others (like lamp and basket look nothing the same, but then the brain map for lamp also returns for bus for some reason).

It’s not a useful endeavor in my opinion, and using computer experience and languages as a model is a pretty shit model, is my conclusion. More predictive possibilities than psychology, but wildly inaccurate and unable to predict its inaccuracy. It’s good to push back because its accuracy is wildly inflated by stembros

I have seen several over the last decade and come to the conclusion that most of these are bunk or just able to recognize one brain signal pattern

The fMRI ones are probably bunk. That said, if you could manage the heinous act of (cw: body gore) implanting several thousands of very small wires throughout someone’s visual cortex, and record the responses evoked by specific stimuli or instructions to visualize a given stimulus, you could probably produce low fidelity reconstructions of their visual perception

are you familiar with the crimes of Hubel and Wiesel?

I am not, and I will look it up in a minute.

But my point is that such a low-fidelity reconstruction, when interpreted through the model of modern computing methods, lacks the accuracy for any application AND, crucially, has absolutely no way to account for and understand its limitations in relation to the intended applications. That last part is a more philosophy of science argument than about some percentage accuracy. It’s that the model has no way to understand its limitations because we don’t have any idea what those are, and discussion of this is limited to my knowledge, leaving no ceiling for the interpretations and implications.

I think a big difference in positions in this thread though is between those talking about how the best neuroscientists in the world think about this, and those who are more technologists who never reached that level and want to Frankenstein their way to tech-bro godhood. I’m sure the top neuros get this, and are constantly trying to find new and better models. But their publications don’t appear on the covers of science journals.

A spectre is haunting Hexbear — the spectre of UlyssesT.

On a more serious note, techbros’ understanding of the brain as a computer is just their wish to bridge subjectivity and objectivity. They want to be privy to your own subjectivity, perhaps even more privy to your own subjectivity than you yourself. This desire stems from their general contempt for humanity and life in general, which pushes them to excise the human out of subjectivity. In other words, if you say that the room is too hot and you want to turn on the AC, the techbro wants to be able to pull out a gizmo and say, “uh aktually, this gizmo read your brain and it says that your actual qualia of feeling hot is different from what you’re feeling right now, so aktually you’re not hot.”

Too bad for the techbro you can never bridge subjectivity and objectivity. The closest is intersubjectivity, not sticking probes into people’s brains.

imagine placing intentional limits on your own desire to understand the universe like this, as though subjective experience isn’t the weirdest fucking thing imaginable and so understanding it is of obvious interest to anybody with any curiosity whatsoever

You understand another person’s subjectivity by talking to them like a normal person, not studying them like a lab rat.

This was a really cool and insightful essay, thank you for sharing. I’ve always conceptualized the mind as a complex physical, chemical, and electrical pattern (edit: and a social context) - if I were to write a sci fi story about people trying to upload their brain to a computer I would really emphasize how they can copy the electrical part perfectly, but then the physical and chemical differences would basically kill “you” instantly creating a digital entity that is something else. That “something else” would be so alien to us that communication with it would be impossible, and we might not even recognize it as a form of life (although maybe it is?).

Would you put your brain in a robot body?

Nails are like candy to robots. And we’ll eat tires instead of licorice.

yes, a robot body with a dumptruck ass (it is an actual dumptruck)

My brain is a pentium overdrive without a fan and I am overheating

I’m glad he mentioned that we aren’t just our brains, but also our bodies and our historical and material contexts.

A “mind upload” would basically require a copy of my entire brain, my body, and a detailed historical record of my life. Then some kind of witchcraft would be done to those things to combine them into the single phenomenal experience of me. Basically:

So, ironically I think the author is falling in to the trap they’re complaining about. They’re talking about an “upload” as somehow copying a file from one computer to another.

Instead, consider transferring your brain to a digital system a little at a time. Old cells die, new cells are created. Do you ever lose subjective continuity during that process? Let one meat cell die and a digital cell grow. Do you stop being yourself once all your brain cells are digital? Was there ever a loss of phenomenology?

You’ve completely misunderstood their criticism of mind uploading.

The author asserts that you are not really your brain. If you copied your brain into a computer, that hapless brain would immediately dissociate and lose all sense of self because it has become unanchored from your body and your sociocultural and historical-materialist context.

You are not just a record of memories. You are also your home, your friends and family, what you ate for breakfast, how much sleep you got, how much exercise you’re getting on a regular basis, your general pain and comfort levels, all sorts of things that exist outside of your brain. Your brain is not you. Your brain is part of you, probably the most important part, but a computer upload of your brain would not be you.

You are not just a record of memories. You are also your home, your friends and family, what you ate for breakfast, how much sleep you got, how much exercise you’re getting on a regular basis, your general pain and comfort levels, all sorts of things that exist outside of your brain. Your brain is not you.

Embodied cognition. I don’t see this as implying that what we’re doing isn’t computation (or information processing) in some sense. It’s just that the way we’re doing it is deeply, deeply different from how even neural networks instantiated on digital computers do it (among other things, our information processing is smeared out across the environment). That doesn’t make it not computation in the same way that not having a cover and a mass in grams makes a PDF copy of Moby Dick not a book. There are functional, abstract similarities between PDFs and physical books that make them the same “kinds of things” in certain senses, but very different kinds of things in other senses.

Whether they’re going to count as relevantly similar depends on which bundles of features you think are important or worth tracking, which in turn depends on what kinds of predictions you want to make or what you want to do. The fight about whether brains are “really” computers or not obscures the deeply value-laden and perspectival nature of a judgement like that. The danger doesn’t lie in adopting the metaphor, but rather in failing to recognize it as a metaphor–or, to put it another way, in uncritically accepting the tech-bro framing of only those features that our brains have in common with digital computers as being things worth tracking, with the rest being “incidental.”

I think I agree.

One metaphor I quite like is the brain as a ball of clay. Whenever you do anything the clay is gaining deformities and imprints and picking up impurities from the environment. Embodied cognition, right? Obviously the brain isn’t actually a ball of clay but I think the metaphor is useful, and I like it more than I like being compared to a computer. After all, when a calculator computes the answer to a math problem the physical structure of the calculator doesn’t change. The brain, though, actually changes! The computation metaphor misses this.

This is really useful for understanding memory, because every time you remember something you pick up that ball of clay and it changes.

After all, when a calculator computes the answer to a math problem the physical structure of the calculator doesn’t change

What counts as “physical structure?” I can make an adding machine out of wood and steel balls that computes the answer to math problems by shuffling levers and balls around. A digital computer calculates the answer by changing voltages in a complicated set of circuits (and maybe flipping some little magnetic bits of stuff if it has a hard drive). Brains do it by (among other things) changing connections between neurons and the allocation of chemicals. Those are all physical changes. Are they relevantly similar physical changes? Again, that depends deeply on what you think is important enough to be worth tracking and what can be abstracted away, which is a value judgement. One of the Big Lies of tech bro narrative is that science is somehow value free. It isn’t. The choice of model, the choice of what to model, and the choice of what predictive projects we think are worth pursuing are all deeply evaluative choices.

In dwarf fortress you can make a computer out of dwarfs, gates, and levers, and it won’t change unless the dwarfs go insane from sobriety and start smashing stuff.

Great example! Failure modes are really important. Brains and dwarf fortresses might both be computers, but their different physical instantiations give them different ways to break down. Sometimes that’s not important, but sometimes it’s very important indeed. Those are the sorts of things that get obscured by these dogmatic all-or-nothing arguments.

Isn’t that what this article is about? That “brain as computer” is a value judgement, just like “brain as hydraulic system” and “brain as telegraph” were? These metaphors are all useful, I think the article was just critiquing the inability for people to think of brains outside of the orthodox computational framework.

I’m just cautioning against taking things too far in the other direction: I genuinely don’t think it’s right to say “your brain isn’t a computer,” and I definitely think it’s wrong to say that it doesn’t process information. It’s easy to slide from a critique of the computational theory of mind (either as it’s presented academically by people like Pinker or popularly by Silicon Valley) into the opposite–but equally wrong–kind of position that brains are doing something wholly different. They’re different in some respects, but there are also very significant similarities. We shouldn’t lose sight of either, and it’s important to be very careful when talking about this stuff.

Just as an example:

That is all well and good if we functioned as computers do, but McBeath and his colleagues gave a simpler account: to catch the ball, the player simply needs to keep moving in a way that keeps the ball in a constant visual relationship with respect to home plate and the surrounding scenery (technically, in a ‘linear optical trajectory’). This might sound complicated, but it is actually incredibly simple, and completely free of computations, representations and algorithms.

It strikes me as totally wrong to say that this process is free of computation. The computation that’s going on here has interesting differences from what goes on in a ball-catching robot powered by a digital computer, but it is computation.
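A rough sketch of why a "simple" catching heuristic still looks like computation. This swaps in a one-dimensional relative of the linear optical trajectory strategy, usually called optical acceleration cancellation, because it fits in a few lines; it is not McBeath's model. The assumptions are a drag-free ball launched from `x = 0`, a stationary fielder, and made-up function names. The fielder never solves for a landing point. They only watch how the tangent of the ball's elevation angle changes and step according to its sign.

```python
G = 9.81  # gravitational acceleration in m/s^2


def tan_elevation(t, fielder_x, vx, vy):
    """Tangent of the ball's elevation angle as seen by a stationary fielder."""
    ball_x = vx * t
    ball_y = vy * t - 0.5 * G * t * t
    return ball_y / (fielder_x - ball_x)


def optical_acceleration(t, fielder_x, vx, vy, dt=0.01):
    """Central finite-difference estimate of d^2(tan elevation)/dt^2."""
    before = tan_elevation(t - dt, fielder_x, vx, vy)
    now = tan_elevation(t, fielder_x, vx, vy)
    after = tan_elevation(t + dt, fielder_x, vx, vy)
    return (before - 2.0 * now + after) / (dt * dt)


def step_direction(t, fielder_x, vx, vy, tolerance=1e-6):
    """Decide which way to move using only the sign of the optical acceleration."""
    a = optical_acceleration(t, fielder_x, vx, vy)
    if a > tolerance:
        return "back"     # image is accelerating upward: ball will land behind you
    if a < -tolerance:
        return "forward"  # image is decelerating: ball will land in front of you
    return "stay"         # image rises at a steady rate: you are on the landing spot
```

Even this stripped-down version measures a quantity, differences it over time, compares it to a threshold and selects an action. It is a very different computation from integrating the ball's trajectory, but it is hard to call it no computation at all.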

Your analogy reminds me a bit of the Freud essay on the mystical writing pad

That should be a red flag to treat it with caution. Freud was a crank and his only contribution to psychology was being so wrong it inspired generations of scientists to debunk him.

What is the ratio of meat parts to machine parts at which point “that chair you once sat on” or “the dust bunnies you haven’t swept up yet even though you keep meaning to” are no longer materially a part of you and you subsequently lose your self as a result?

If someone loses their leg and gets a prosthetic does this alienate them from the birds that woke them up last week?

If someone loses their leg and gets a prosthetic does this alienate them from the birds that woke them up last week?

This trope in cyberpunk pisses me off to no end. Writers just out there saying “using a wheelchair makes you less human and the more wheelchair you use the less human you become.”

Like people are out there, living, surviving, retaining their sanity, in comas where they have no access to sensory input. Those people wake up and they’re still human after living in the dark for years. People who have no sensation or control below the neck go right on living without turning in to psycho-murderers because they’re so alienated from humanity because they can’t feel their limbs. How is it that people somehow lose their humanity and turn in to monsters just because they’ve got some metal limbs? You can cut out half of someone’s brain and there will still be a person on the other side. They might be pretty different, but there’s still a person there. People survive all kinds of bizarre brain traumas.

How is it that people somehow lose their humanity and turn in to monsters just because they’ve got some metal limbs?

Corporate bloatware/adware in cyber-limbs. That’s the explanation in my cyberpunk setting.

Yeah. At least there’s been a movement in the genre towards “ok, it’s not cybernetic implants in general, it’s chronic pain from malfunctioning or poorly calibrated implants, it’s the trauma of a violent and alienated society intersecting with people who are both suffering and who have a massively increased material capacity to commit violence, etc” there. Like Mike Pondsmith himself has still got a bit of a galaxy brain take on it, but even he’s moved around to something like “cyberpsychosis is a confluence of trauma and machines that are actively causing pain, nervous system damage, etc and which need a cocktail of drugs to manage which also have their own health and psychiatric consequences.”

I don’t see that as an improvement or a recognition of what is wrong with “cyberpsychosis” and related concepts. People live with severe trauma, severe chronic pain, and severe psychiatric problems and manage to keep it together. Pondsmith is making up excuses to keep “wheelchairs make you evil” in his game instead of recognizing the notion for what it is and discarding it.

I think it’s a good example of ingrained, reflexive ableism. It’s a holdover from archaic 20th century beliefs about disabled people being less human, less intelligent, less capable. Cybernetics are not a good metaphor for capitalist alienation or any other kind of alienation. They are, no matter how you cut it, aids and accommodations for disability. You just cannot say that cyberware makes you evil without also saying that disabled people using aids in your setting are alienating themselves from humanity and becoming monsters. If you wanted to argue that getting wired reflexes, enhanced musculature, getting your brain altered so you can shut off empathy or fear, things that you do voluntarily to make yourself a better tool for capitalism, gradually resulted in alienation, go for it.

Ghost in the Shell does a good job with that. Kusanagi isn’t alienated from humanity because she’s a cyborg, but her alienation from humanity grows from questioning what it means for her to be a cyborg, a brain in a jar. She’s got super-human capabilities - she’s massively stronger and more resilient, she’s a wizard hacker augmented with cyberware that lets her directly interface with the net in a manner most people simply don’t have the skills for. Her digestive and endocrine systems are under her conscious fine control. These things don’t make her an alien or a monster, they create questions in her mind about her identity, her personhood, and how she can even relate to normal humans as her perspective and understanding of the world moves further and further away from them.

And this isn’t a bad thing. It doesn’t lead her to self-destruction or a berserk rage. Instead it leads her to growth, change, and evolution. She ambiguously dies, but in dying brings forth new life. Her new form is not an enemy of humanity or a threat, but instead a new kind of being that is a child or inheritor of humanity, humanity growing past its limitations to seek new horizons of potential.

The key difference is Kusanagi has agency. The cybernetics don’t force her towards alienation. They don’t damage her mind and turn her in to a monster with no agency. Kusanagi’s alienation grows from her own lived experience, her own thoughts and learning. They grow from her interactions with the people in her life and her day to day experiences. Her cybernetics are an important part of that experience, but she is in control of her cyberbody. It is not controlling her and turning her in to a hapless victim.

Basically; the Cyberpunk paradigm says that using a wheelchair makes you violent and evil. The GitS paradigm says using a wheelchair makes you consider the world from a different perspective. In the former disability, both physical and mental (false dichotomy I know) is villainized and demonized. In the latter disability is a state that creates separation from “normal” people in a way that reflects the experiences of real disabled people, but is otherwise neutral.

Pondsmith is making up excuses to keep “wheelchairs make you evil” in his game instead of recognizing the notion for what it is and discarding it.

At this point as I understand it his take is “alienation/isolation, trauma from a violent society, and denial of access to necessary medical care can eventually break someone, and someone who can bench press a car and has a bunch of reflex enhancers jacked directly into their spine is more likely to lash out in a dangerous way when their back’s to the wall, they think they’re going to die, and they panic,” with a whole lot of emphasizing social support networks as being important for surviving and enduring trauma like that.

It’s still not as good a take as “cyberpsychosis isn’t real, it’s just a bullshit diagnosis applied to people pushed past the brink by their material circumstances, acting in the way that a society that revolves around violence has ultimately taught them to act, who then just double down on it because they know they’re going to be summarily executed by the police who have no interest in deescalation or trying to take them alive, compounded with the fact that they can bench press a car and react to bullets fast enough to simply get out of the way, at least for a while” would be, but it’s earnest progress from someone who’s weirdly endearing despite being an absolute galaxy brained lib.

Weirdly, Cyberpunk 2077 seems to have had a better take on it than Pondsmith himself, with “cyberpsychos” mostly being just people with an increased capacity for violence dealing with intolerable material conditions until they fight back a little too hard against a real or perceived threat, with one who’s not even on a rampage and instead is just a heavily augmented vigilante hunting down members of a criminal syndicate that had murdered someone close to him. The police chatter also has the player branded a cyberpsycho when you get stars, reflecting the idea that it’s more a blanket term applied to anyone with augments who’s doing a violent crime than a real thing.

I have no idea, but neither do you, which is kind of the point. How much of you is your brain, your body, your context?

Even if we can’t put a number on it, I think it’s trivial to assert that you are not just your brain. So, if you copied only your brain into some kind of computer, there would be parts of you that are missing because you left them behind with your meat.

neither do you

Sure I do: that ratio does not exist, and no you don’t get alienated from your material context if you have a prosthetic limb. We’re made up of parts that perform functions, and we can remain ourselves despite the loss of large chunks of those - so long as someone remains alive and the brain keeps working they’re still themself, they’re still in there.

If someone could keep the functions of the brain ongoing and operating on machine bits they’d still be in there. It may be a transformative and lossy process, it may be unpleasant and imperfect in execution, but the same criticism applies to existing in general: at any point you may be forced out of your normal material context by circumstance and this is traumatic, you may lose the healthy function of large swathes of your body and this is traumatic, you may suffer brain damage and this is traumatic, you’re constantly losing and overwriting memories and this can be normal or it can be traumatic, etc, but through it all you are you and you’re still in there because ontologically speaking you’re the ongoing continuation of yourself, distinct from all the component parts that you’re constantly losing and replacing.

Sure I do: that ratio does not exist, and no you don’t get alienated from your material context if you have a prosthetic limb.

Does body dysmorphic disorder not exist? Or phantom limb? A full body prosthetic would undoubtedly be a difficult adjustment!

And would an upload be a person, legally speaking? Would your family consider the upload to be a person? That’s pretty alienating.

And people survive all of that stuff, and are still people. I really don’t understand what you’re getting at here.

I didn’t say “you are perfectly happy and have no material problems whatsoever dealing with a traumatic injury and imperfect replacement,” but rather that this doesn’t represent some sort of fundamental loss of self or unmooring from material contexts. People can lose so much, can be whittled away to almost nothing all the way up to the point where continued existence becomes materially impossible due to a loss of vital functions, but through that they still exist, they remain the same ongoing being even if they are transformed by the trauma and become unrecognizable to others in the process.

And would an upload be a person, legally speaking? Would your family consider the upload to be a person? That’s pretty alienating.

If you suffer a traumatic brain injury and lose a large chunk of your brain, that’s going to seriously affect you and how people perceive you, but you’re still legally the same person. If that lost chunk was instead replaced with a synthetic copy there may still be problems but less so than just losing it outright. So if that continues until you’re entirely running on the new synthetic replacement substrate, then you have continued to exist through the entire process just as you continue to exist through the natural death and replacement of neurons - for all we know copying and replacing may not even be necessary compared to just adding synthetic bits and letting them be integrated and subsumed into the brain by the same processes by which it grows and maintains itself.

A simple copy taken like a photograph and then spun up elsewhere would be something entirely distinct, no more oneself than a picture or a memoir.

This is just god of the gaps. “we don’t know so it’s not possible”. Saying “just copy the brain” is a reductive understanding of what’s being discussed. If we can model the brain then modelling the endocrine system is probably pretty trivial.

I didn’t read it as being impossible? I think you could upload a human mind into a computer, but it can’t just be their brain. Your mind, your phenomenal self, is more than just your brain because your brain isn’t just a hard drive. That’s what I took away from the article, anyway.

You are some mix of your brain, your body, and your context. Whatever upload magic exists would need all of that to work.

Aight I think we might be stuck in a semantics disagreement here. I’m using brain to mean the actual brain organ plus whatever other stuff is needed to support brain function - the endocrine system, nervous system, whatever. The physical systems of cognition in the body. i do not mean literally only the brain organ with no other systems.

This really sounds like mind-body dualism. Setting aside the body, which you could just stick the new brain back in and hook the nervous system back up to, why would you be cut off from your sociocultural and historical material context? Go home. Pet your cat with your robot arms. Hang out with your family, eat breakfast. Wherein lies the problem? All of those things exist inside your brain. Your brain is taking the very shoddy, very poor information being supplied by your sensory organs and assembling it into approximations of what’s happening around you. We don’t interact with the world directly. Our interaction is moderated by our sensory organs and lots of non-conscious components of the mind, and they’re often wrong. If you hook up some cameras and a nervous system and some meat to this theoretical digital mind it has all the things a meat mind has. The same sensory information.

Like, really, where is the problem? A simulated brain is a brain is a brain. Put the same information in you’re going to get the same results out. Doesn’t matter if the signals are coming from a meat suit or a mathematical model of a meat suit, as long as they’re the right signals.

Okay, imagine your family does not recognize your mind upload as a person. That’s not hard to imagine, I bet most people today would struggle with that kind of barrier. Maybe they treat it like a memorial of a dead person and do not treat it like a member of the family, maybe they shun it because it’s creepy and don’t want anything to do with it, maybe they try to destroy it in the memory of who you were.

That’s your sociocultural and historical material context. The mind upload would become a new person entirely because of the insurmountable differences between being made of meat and being made of data. That upload could never be the person you are now, because the upload becomes someone different as soon as they stop being made of meat and stop eating hot chip and stop paying taxes etc.

Okay, imagine your family does not recognize your mind upload as a person.

That’s the day to day lived experience of like half the queer people in the world. I rejected part of my family and kicked them out of my life. i don’t have to imagine it, I’ve lived it.

You seem to have an assumption that some kind of digital transfer of consciousness would not physically interact with the world. You could just plug them in to a body. If you can run an entire brain on a computer hooking it in to a meat suit sounds fairly trivial. You can go right on eating hot chip and paying taxes. I think we’re operating from different perspectives here and I’m not sure what yours is. A digital mind could have a digital body and eat digital hot chip and they’d definitely be forced to pay taxes.

That’s the day to day lived experience of like half the queer people in the world. I rejected part of my family and kicked them out of my life. i don’t have to imagine it, I’ve lived it.

Okay, and are you the same person you were before that? I’ve experienced that too, and I don’t think I am the person I was before. I think I became someone else in order to survive. If I could somehow go back in time and meet myself we would hardly recognize each other!

they’d definitely be forced to pay taxes.

No, they’d be property and someone else would have to pay taxes for owning them.

When I talk about the sociocultural context I’m talking about the fact that society would not treat the mind upload as a person. We have not developed far enough along our historical material context to recognize uploads as people. The trauma of that experience would necessitate becoming a different person entirely, and thus, they’re no longer an upload of you. That’s the problem with talking about mind uploads.

You are not just your brain (and its supporting structures). You are your context.

Let one meat cell die and a digital cell grow. Do you stop being yourself once all your brain cells are digital?

Real Ship of Theseus Hours (kinda).

Yeah, pretty much. I think people are stuck in this idea that we’d somehow take a picture of the mind all at once and there’d be a loss of continuity and then you’re not the same person, but like, we’ve already got the intelligence making machine, why not just swap the parts out with maths one at a time until it’s all maths? Why would that be a problem? I sincerely think that a lot of people who object to this either aren’t really thinking of the concept beyond what’s presented in pop sci-fi or are still stuck on the idea of an incorporeal mind separate from material reality.

I think the issue for some people might be that parts does not equal a whole, or to paraphrase, “a brain is more than the sum of its parts”. A person upon hearing the concept of brain uploading might ask “is this upload really me, or would the digital brain be an AI copy mimicking me?” Something could get lost in the translation, but that’s why I mentioned the Ship of Theseus. As far as some people are concerned, something is lost, BUT they would have trouble identifying what that something is (EDIT: other than actual organic gray matter). To the brain itself, it will think of itself as being the original, such that nothing was lost.

Yeah, it’s the illusion of self and continuity of consciousness thing. People assume that any upload would be necessarily destructive and that there’d be a loss of continuity. No one ever seems to think “well, wait, why couldn’t I be awake for the whole operation in a way that preserves subjective continuity?”

In the Ship of Theseus concept, where the brain is replaced cell by cell, you don’t get your head chopped off and imaged, you’re very gradually shifting what hardware your mind is running on. The lights never go out, there’s never a drastic, shocking moment of change, you never wake up in a jar.

That still relies on being able to digitally emulate wet cognition, which may not be possible.

Sure, maybe it’s not, but afaik there’s nothing that rules out the possibility. We can’t do it now, but I’m not aware of any physical laws or limits that make it impossible for us to do it at some point when we’ve got a better understanding of what’s happening and a lot of silicon to run the maths on.

Yeah maybe, but I don’t think we’re figuring it out anytime soon

I really like the description of evolution outcomes as “totally bonkers bs”

I genuinely don’t know how to explain what evolution is as a process most of the time: “imagine a drop of water seeking the sea, but the drop of water really wants to fuck, and sometimes it gets hit by an asteroid?”

“Complex self-replicating systems reversing local entropy while undergoing variation caused by entropy until they lose equilibrium and can no longer self-replicate”?

It’s an incredibly simple concept. Water seeks the sea. And it’s also an incredibly complicated, obtuse concept. I think a huge part of the difficulty is cultural - we anthropomorphize and ascribe agency to everything, and evolution is the absolute and total absence of agency, the pure action of entropy

If our brains were computers we wouldn’t have computers.

here are some more relevant articles for consideration from a similar perspective, just so we know it’s not literally just one guy from the 80s saying this. some cite this article as well but include other sources. the authors are probably not ‘based’ in a political sense, i do not condone the people but rather the arguments in some parts of the quoted segments.

https://medium.com/@nateshganesh/no-the-brain-is-not-a-computer-1c566d99318c

Let me explain in detail. Go back to the intuitive definition of an algorithm (remember this is equivalent to the more technical definition): “an algorithm is a finite set of instructions that can be followed mechanically, with no insight required, in order to give some specific output for a specific input.” Now if we assume that the input and output states are arbitrary and not specified, then time evolution of any system becomes computing its time-evolution function, with the state at every time t becoming the input for the output state at time (t+1), and hence too broad a definition to be useful. If we want to narrow the usage of the word computers to systems like our laptops, desktops, etc., then we are talking about those systems in which the input and output states are arbitrary (you can make Boolean logic work with either physical voltage high or low as Boolean logic zero, as long as you find suitable physical implementations) but are clearly specified (voltage low=Boolean logic zero generally in modern day electronics), as in the intuitive definition of an algorithm... with the most important part being that those physical states (and their relationship to the computational variables) are specified by us!!! All the systems that we refer to as modern day computers and want to restrict our usage of the word computers to are in fact created by us (or our intelligence to be more specific), in which we decide what are the input and output states. Take your calculator for example. If you wanted to calculate the sum of 3 and 5 on it, it is your interpretation of the pressing of the 3, 5, + and = buttons as inputs, and of the number that pops up on the LED screen as output, that allows you to interpret the time evolution of the system as a computation, and imbues the computational property to the calculator. Physically, nothing about the electron flow through the calculator circuit makes the system evolution computational.
This extends to any modern day artificial system we think of as a computer, irrespective of how sophisticated the I/O behavior is. The input and output states of an algorithm in computing are specified by us (and we often have agreed upon standards on what these states are, e.g. voltage lows/highs for Boolean logic lows/highs). If we miss this aspect of computing and then think of our brains as executing algorithms (that produce our intelligence) like computers do, we run into the following:

(1) a computer is anything which physically implements algorithms in order to solve computable functions.

(2) an algorithm is a finite set of instructions that can be followed mechanically, with no insight required, in order to give some specific output for a specific input.

(3) the specific input and output states in the definition of an algorithm and the arbitrary relationship between the physical observables of the system and computational states are specified by us because of our intelligence, which is the result of…wait for it…the execution of an algorithm (in the brain).

Notice the circularity? The process of specifying the inputs and outputs needed in the definition of an algorithm is itself defined by an algorithm!! This process is of course a product of our intelligence/ability to learn: you can’t specify the evolution of a physical CMOS gate as a logical NAND if you have not already learned what NAND is, or are not capable of learning it in the first place. And any attempt to describe it as an algorithm will always suffer from the circularity.
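For anyone who wants the voltage point made concrete, here is a minimal sketch (mine, not from the linked article) of the claim that the mapping between physical states and Boolean values is a convention we pick. The gate table, the two encodings and every function name below are made up for illustration. The same voltage behaviour reads as NAND under one labelling and as NOR under the other, which I take to be what the author means by the states being "specified by us".

```python
from itertools import product

# Hypothetical physical gate, described only in terms of voltages:
# the output is LOW only when both inputs are HIGH.
PHYSICAL_GATE = {
    ("low", "low"): "high",
    ("low", "high"): "high",
    ("high", "low"): "high",
    ("high", "high"): "low",
}

# Two labellings of the same voltages (both are assumptions we choose).
POSITIVE_LOGIC = {"low": 0, "high": 1}  # the usual convention
NEGATIVE_LOGIC = {"low": 1, "high": 0}  # the same physics, relabelled


def truth_table(gate, encoding):
    """Read the voltage table as a Boolean function under an encoding."""
    return {
        (encoding[a], encoding[b]): encoding[out]
        for (a, b), out in gate.items()
    }


def nand(a, b):
    return 1 - (a & b)


def nor(a, b):
    return 1 - (a | b)


def matches(table, fn):
    """True if the table agrees with fn on all four input pairs."""
    return all(table[(a, b)] == fn(a, b) for a, b in product((0, 1), repeat=2))


positive = truth_table(PHYSICAL_GATE, POSITIVE_LOGIC)
negative = truth_table(PHYSICAL_GATE, NEGATIVE_LOGIC)

print("positive logic is NAND:", matches(positive, nand))
print("negative logic is NOR:", matches(negative, nor))
```

Nothing about `PHYSICAL_GATE` changes between the two readings; only the dictionary we chose to interpret it with does. Whether that supports the article's circularity argument is a separate question the sketch doesn't settle.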

And yet there is a growing conviction among some neuroscientists that our future path is not clear. It is hard to see where we should be going, apart from simply collecting more data or counting on the latest exciting experimental approach. As the German neuroscientist Olaf Sporns has put it: “Neuroscience still largely lacks organising principles or a theoretical framework for converting brain data into fundamental knowledge and understanding.” Despite the vast number of facts being accumulated, our understanding of the brain appears to be approaching an impasse.