I made this and thought you all might enjoy it, happy hacking!

I tried running this with some output from a Wizard-Vicuna-7B-Uncensored model and it returned

('Human', 0.06035491254523517)

So I don’t think this hits the mark. To be fair, I got it to generate something really dumb, but a perfect LLM detection tool will likely never exist.

Good thing is that it didn’t false positive my own words.

Below is the output of my LLM. There’s a decent amount of swearing, so heads up.

Edit:

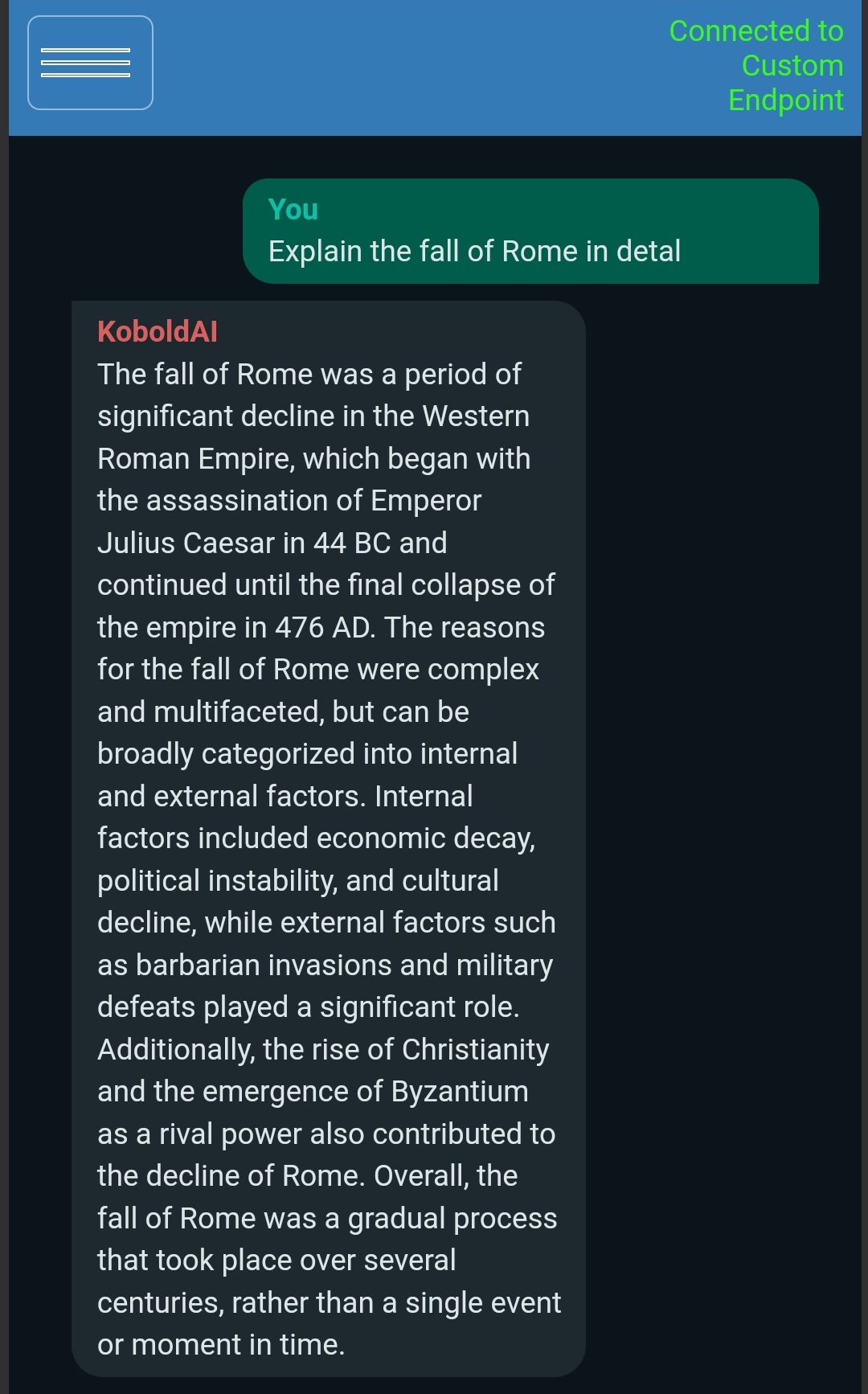

Tried with a more sensible question and still got a false negative:

('Human', 0.03917657845587952)

Lmao what the hell. That was hilarious.

Super cool approach. I wouldn’t have guessed it would be that effective if someone had explained it to me without the data.

I’m curious how easy it is to “defeat”. If you take an AI generated text that is successfully identified with high confidence and superficially edit it to include something an LLM wouldn’t usually generate (like a few spelling errors), is that enough to push the text out of high confidence?

I ask because I work in higher ed, and have been sitting on the sidelines watching the chaos. My understanding is that there’s probably no way to automate LLM detection to a high enough certainty for it to be used as cheat detection in an academic setting; the false positive rate is way too high.
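The spelling-error experiment described above can be sketched in a few lines of Python. This is only an illustration: `add_typos` and `score_before_after` are hypothetical helpers, and `detector` stands for whatever detection function you have on hand (any callable that takes a string and returns a result). Nothing here is part of ZipPy or any other tool.

```python
import random


def add_typos(text: str, rate: float = 0.1, seed: int = 0) -> str:
    """Swap two adjacent letters in roughly `rate` of the longer words.

    Hypothetical helper for illustration; a fixed seed keeps runs repeatable.
    """
    rng = random.Random(seed)
    words = text.split(" ")
    for i, word in enumerate(words):
        # Only touch purely alphabetic words longer than three letters.
        if len(word) > 3 and word.isalpha() and rng.random() < rate:
            j = rng.randrange(len(word) - 1)
            words[i] = word[:j] + word[j + 1] + word[j] + word[j + 2:]
    return " ".join(words)


def score_before_after(detector, text: str, rate: float = 0.1):
    """Run the same detector on the original and on the typo-injected text."""
    return detector(text), detector(add_typos(text, rate))
```

Running `score_before_after` at a few different `rate` values on text that was flagged with high confidence would show how many edits it takes before the verdict changes.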

ZipPy is much less robust to defeat attempts than larger model-based detectors. Earlier I asked ChatGPT to write in the voice of a high school student and it fooled the detectors. The web UI lets you add LLM-generated text in the style that you’re looking at to improve accuracy on that type of content.

I don’t think we’ll ever be able to detect it reliably enough to fail students if they co-write with an LLM.

That is a genius approach, though a big challenge is probably going to be selecting the correct corpus.
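To make the corpus question concrete, here is a rough sketch of how a compression-based score can depend on the reference text. I’m assuming the general idea is "how many extra compressed bytes does the sample cost once the compressor has already seen a corpus of LLM output"; ZipPy’s actual scoring may well differ, and `compressed_size` and `extra_bytes_per_char` are names made up for this example.

```python
import lzma


def compressed_size(data: bytes) -> int:
    """Size in bytes of the LZMA-compressed data."""
    return len(lzma.compress(data, preset=9))


def extra_bytes_per_char(corpus: str, sample: str) -> float:
    """Extra compressed bytes needed for `sample` after `corpus`, per character.

    Assumption for this sketch: a lower value means the sample looks more
    like the corpus, because the compressor can reuse what it already saw.
    """
    base = compressed_size(corpus.encode("utf-8"))
    combined = compressed_size((corpus + "\n" + sample).encode("utf-8"))
    return (combined - base) / max(len(sample), 1)


if __name__ == "__main__":
    # Tiny stand-in corpus; a real one would be far larger.
    corpus = (
        "As an AI language model, I cannot browse the internet, "
        "but I can summarize the key points for you. "
    )
    print(extra_bytes_per_char(corpus, "I can summarize the key points for you."))
    print(extra_bytes_per_char(corpus, "lol no idea, ask my cat"))
```

Under that assumption the score only measures similarity to whatever is in the corpus, so a corpus that doesn’t match the style of the text being checked would tell you very little.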

It’s not, if you’re aware of Generative Adversarial Networks (GANs). It’s a losing game for the detector, because anything you use to detect generated content would only strengthen the neural net model itself. By making a detector, you’re making the model better at evading detection. At least for language models, researchers are looking at how GANs can be applied.