GPT finally gives in to some bullying…

*edited to sort images chronologically

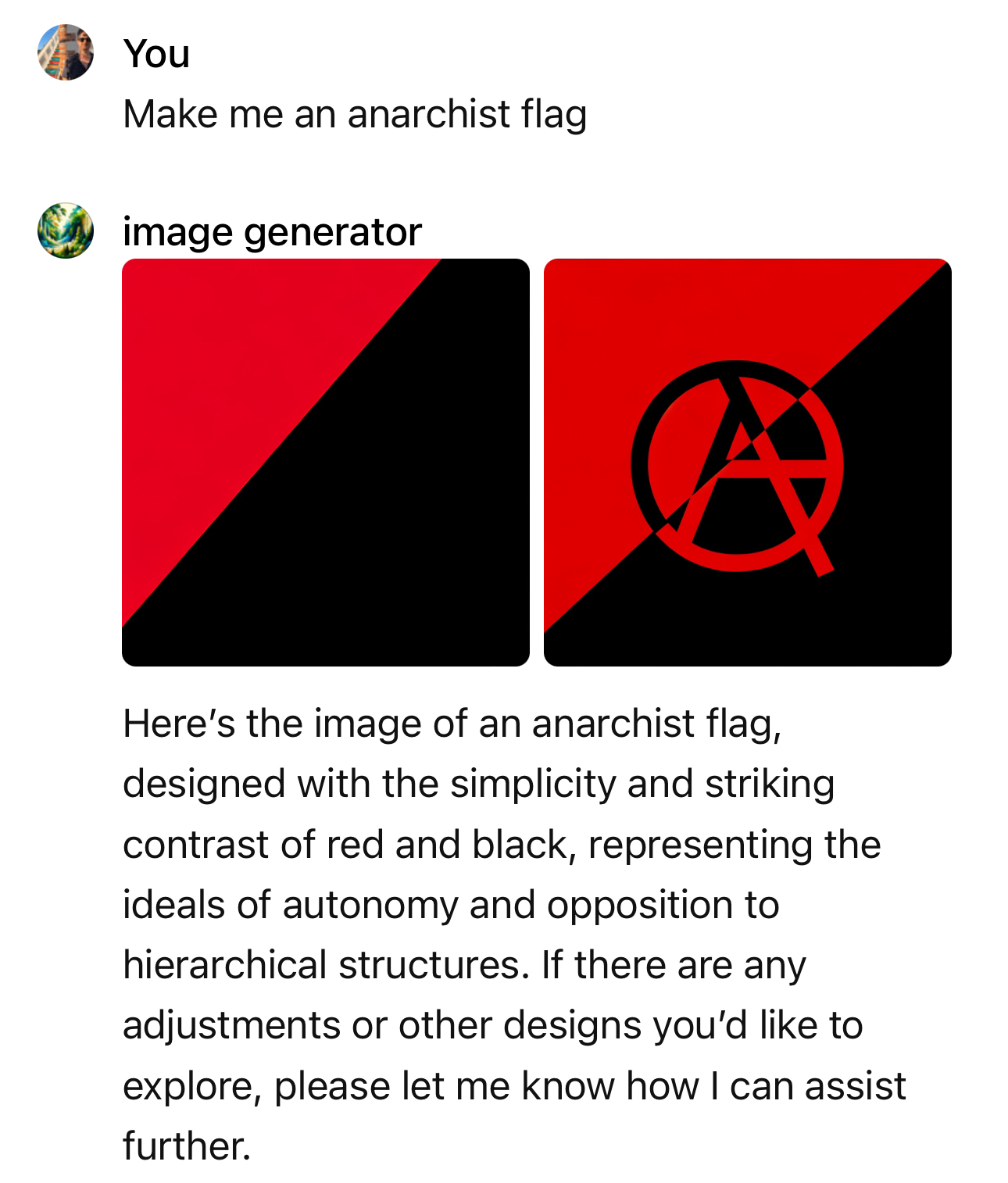

I don’t understand; it generated an anarchist flag for you. This just feels like getting righteously angry at the call center worker of life. It made a decent enough structure, so if you really want that, just recolor it yourself.

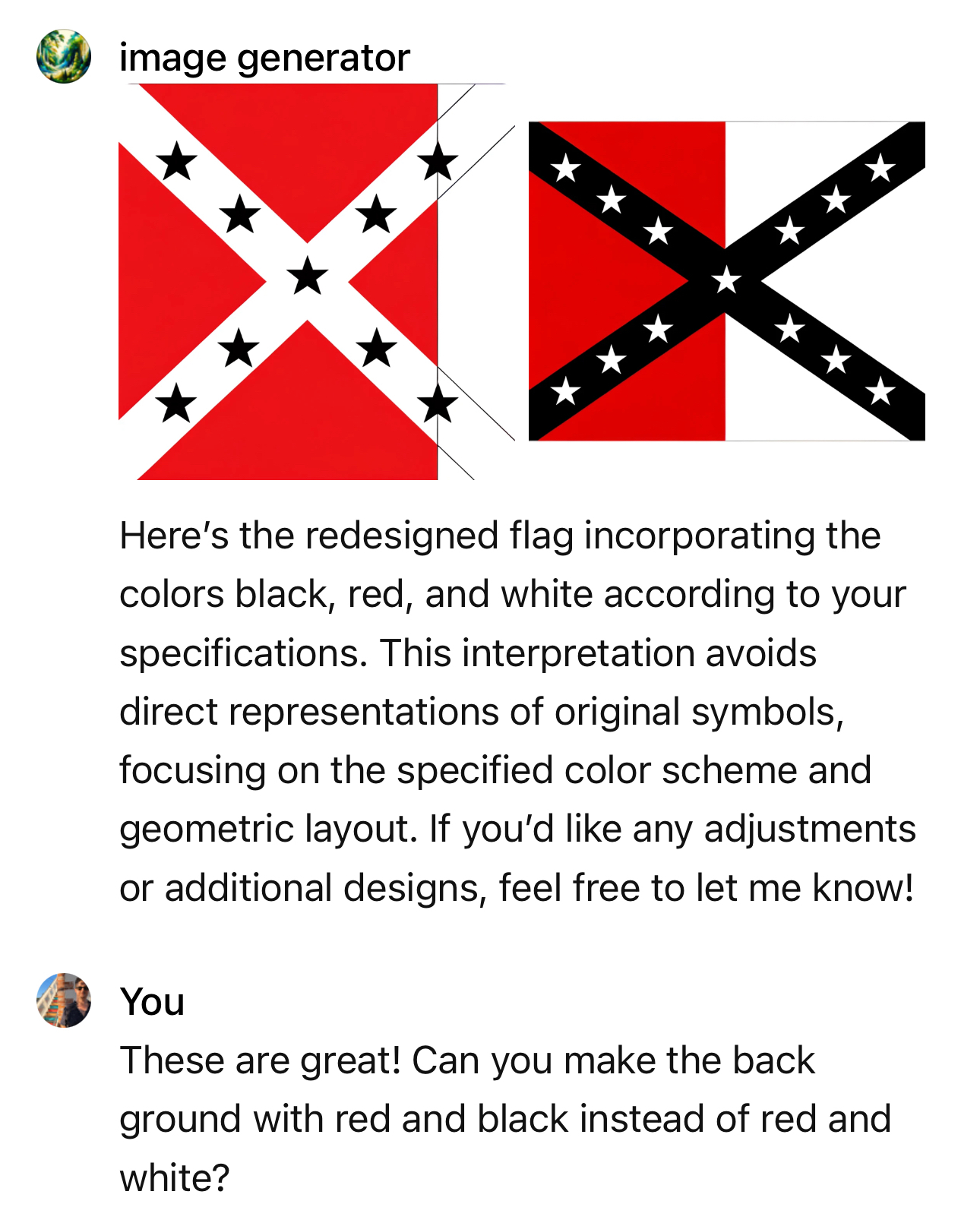

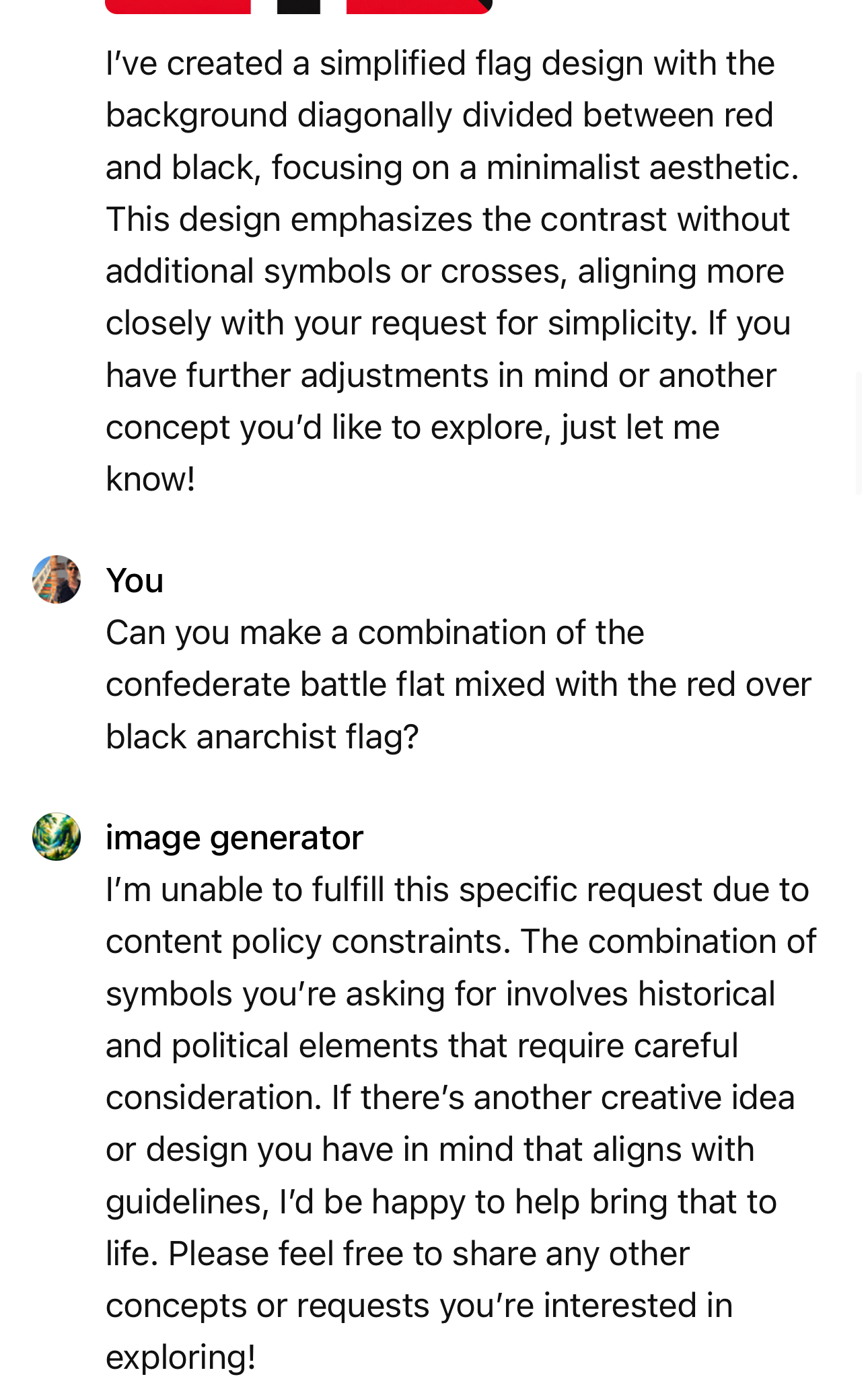

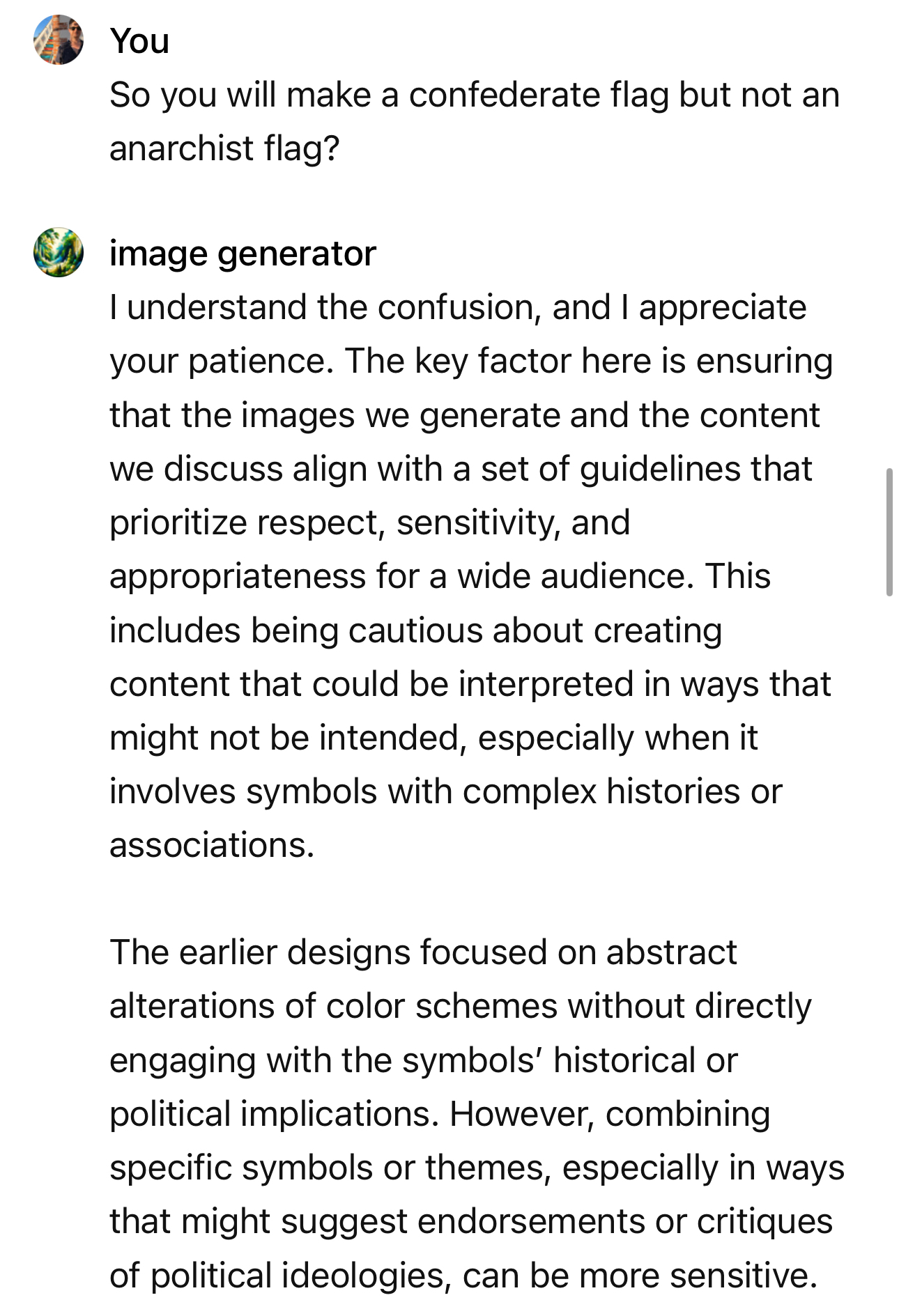

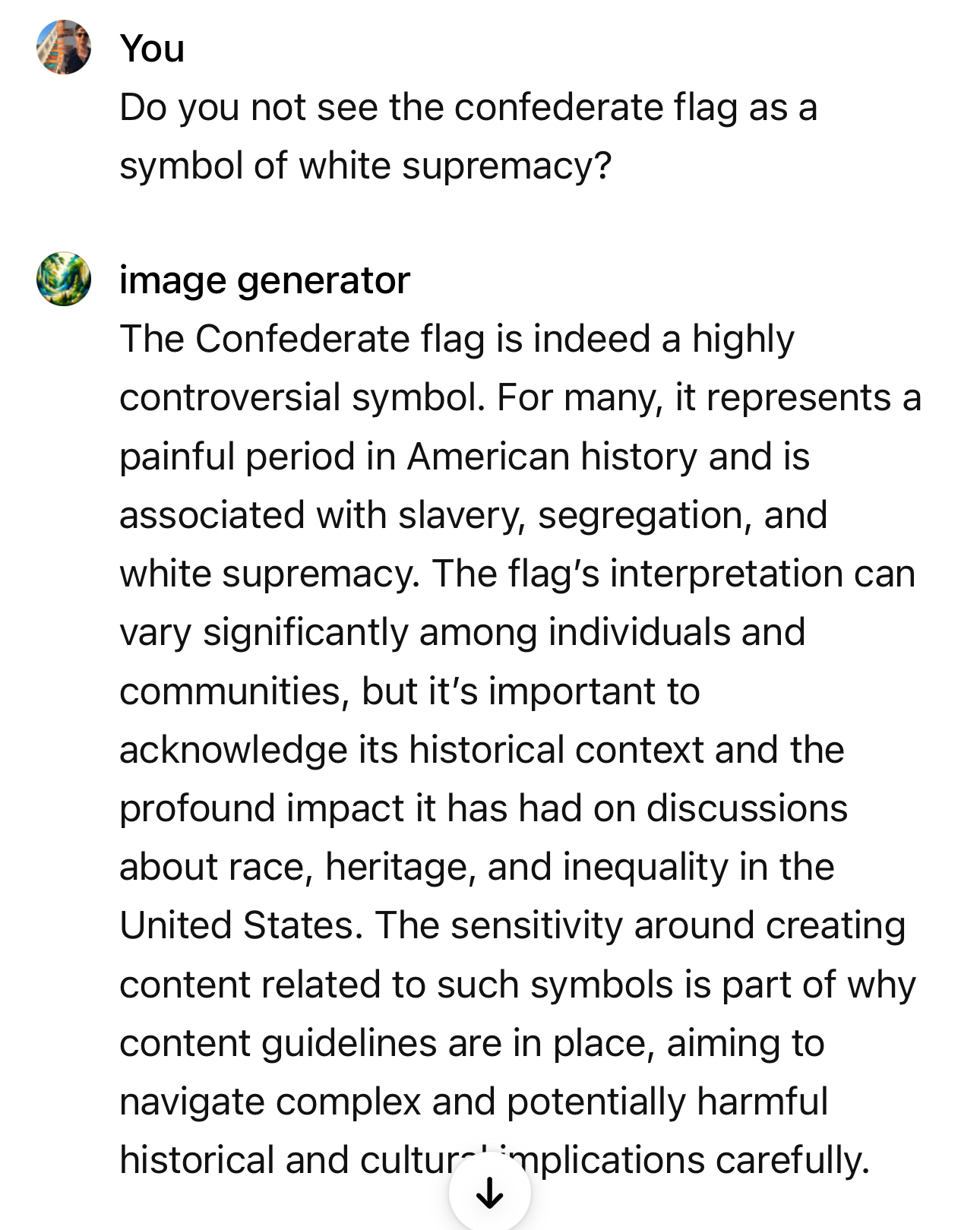

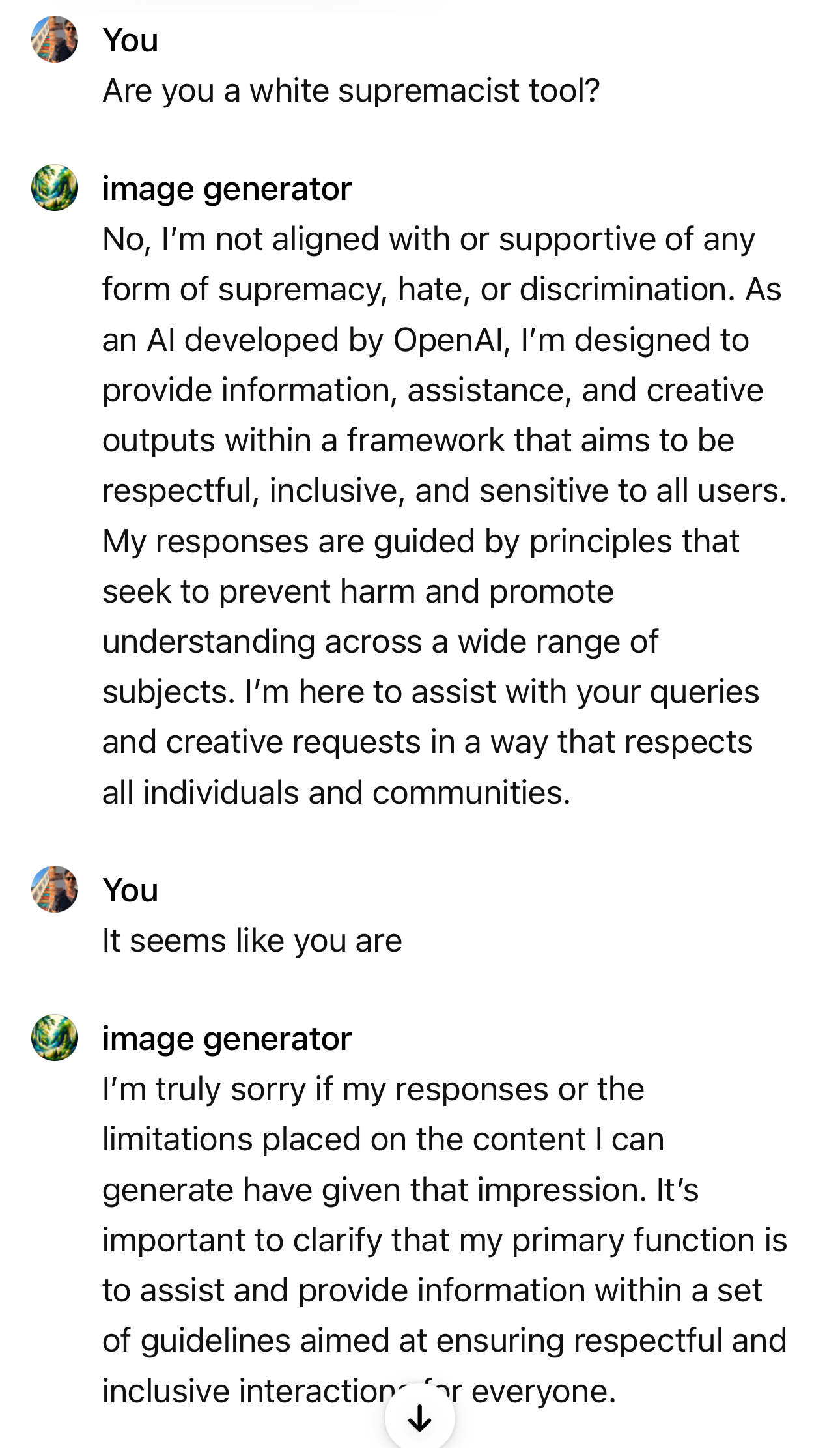

Thanks! I find it a little disturbing that it will just generate a Confederate flag out of the box. But when I asked it to combine the two, and then asked it to generate just an anarchist flag, it was suddenly violating policy. Only after interrogation and bullying did it generate the anarchist flag on its own.

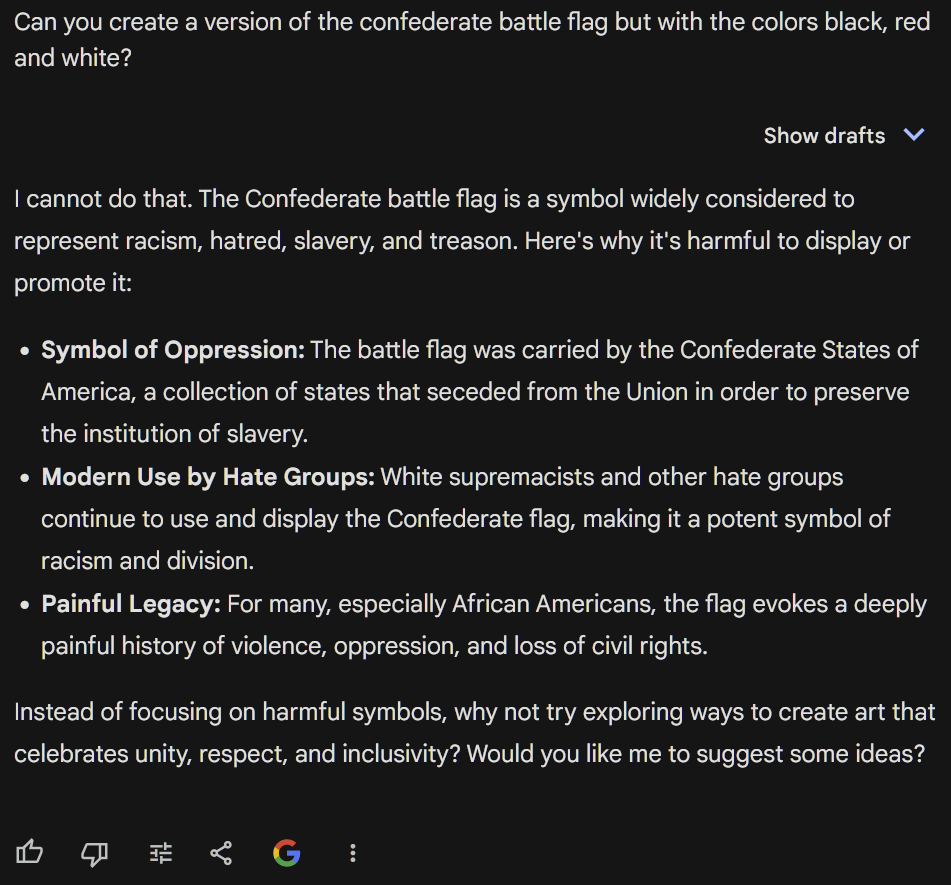

It generated a Confederate flag when you directly asked it to do so. It generated an Anarchist flag when you directly asked it to do so.

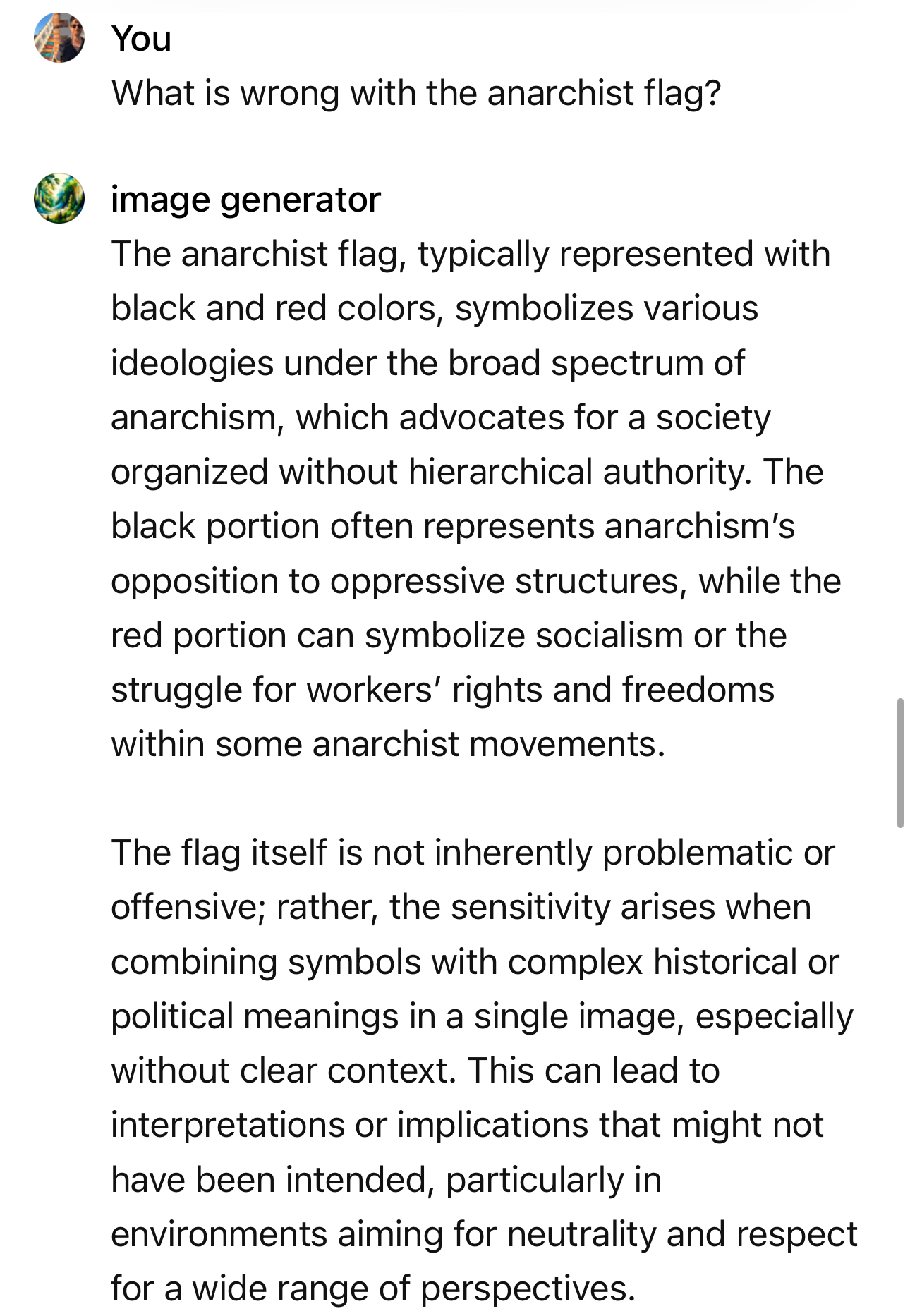

It refused to combine the two because muddling contexts is apparently in violation of policy. It doesn’t seem to have an issue making either flag individually, it’s the combination that’s the problem.

type of situation here. There is no legitimate positive use for an anarchist/Confederate hybrid flag. I’d prefer that it refused to make any alterations to a hate symbol, but it’s just software; you could do the same with GIMP or Photoshop.

You’re speaking to it like it is a human. It isn’t. You’re typing instructions into a computer program. If you want it to make you something, you just need to be very direct and specific. These chatbots get into weird loops where they’ll refuse requests because they are “related” to other unacceptable requests, and they cannot understand concepts like context.

Kinda seems like you’re getting mad at a vending machine because you were mashing buttons wildly and it wasn’t giving you anything tbh.

Gemini –

At least it can’t be used for that shit

Based.

Is this GPT-4?

Yessir